Dynamic Dollar Cost Averaging into Bitcoin

Objective of this anlysis is to set the stage for the development of a quantitative appoach to dollar cost averaging into Bitcoin.

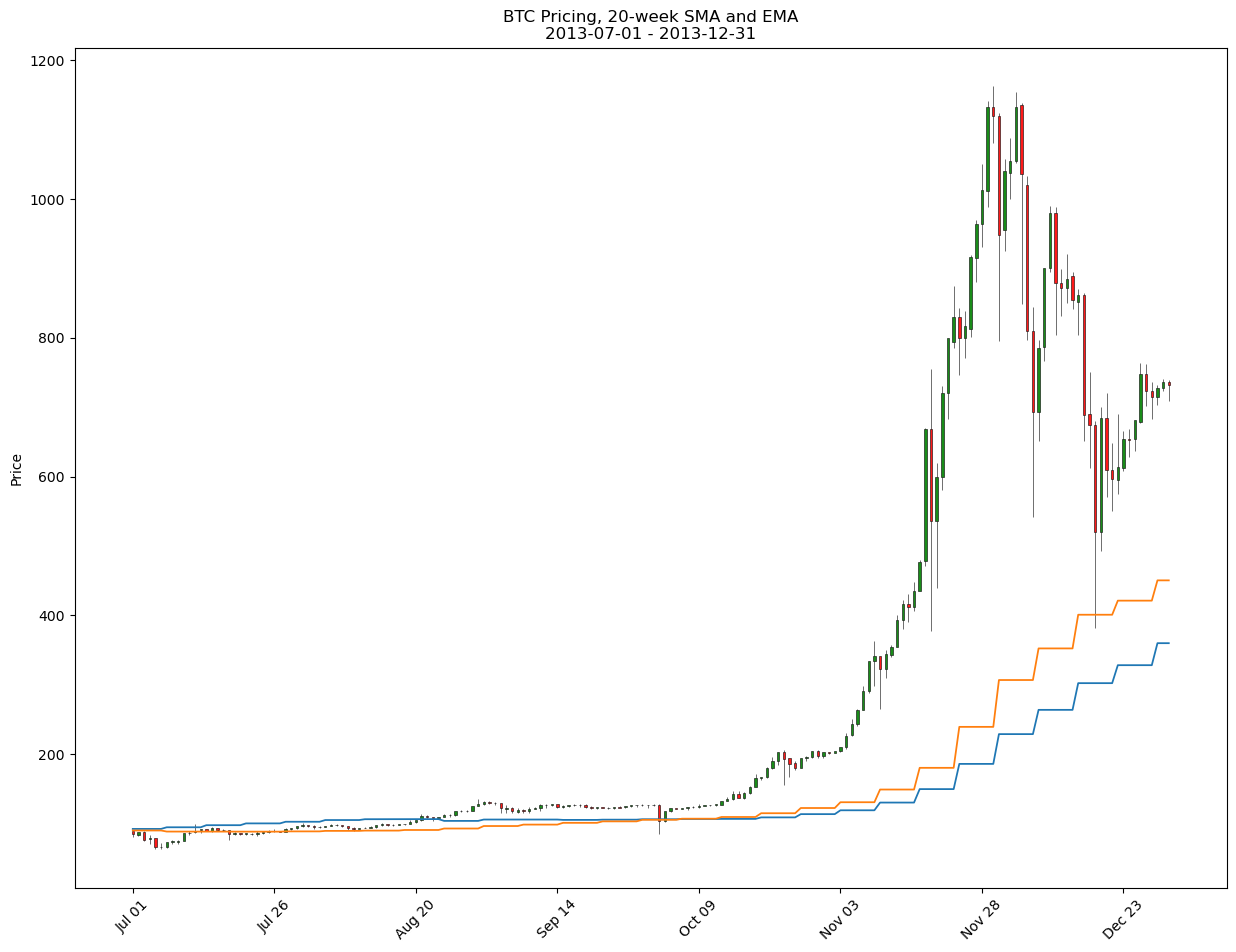

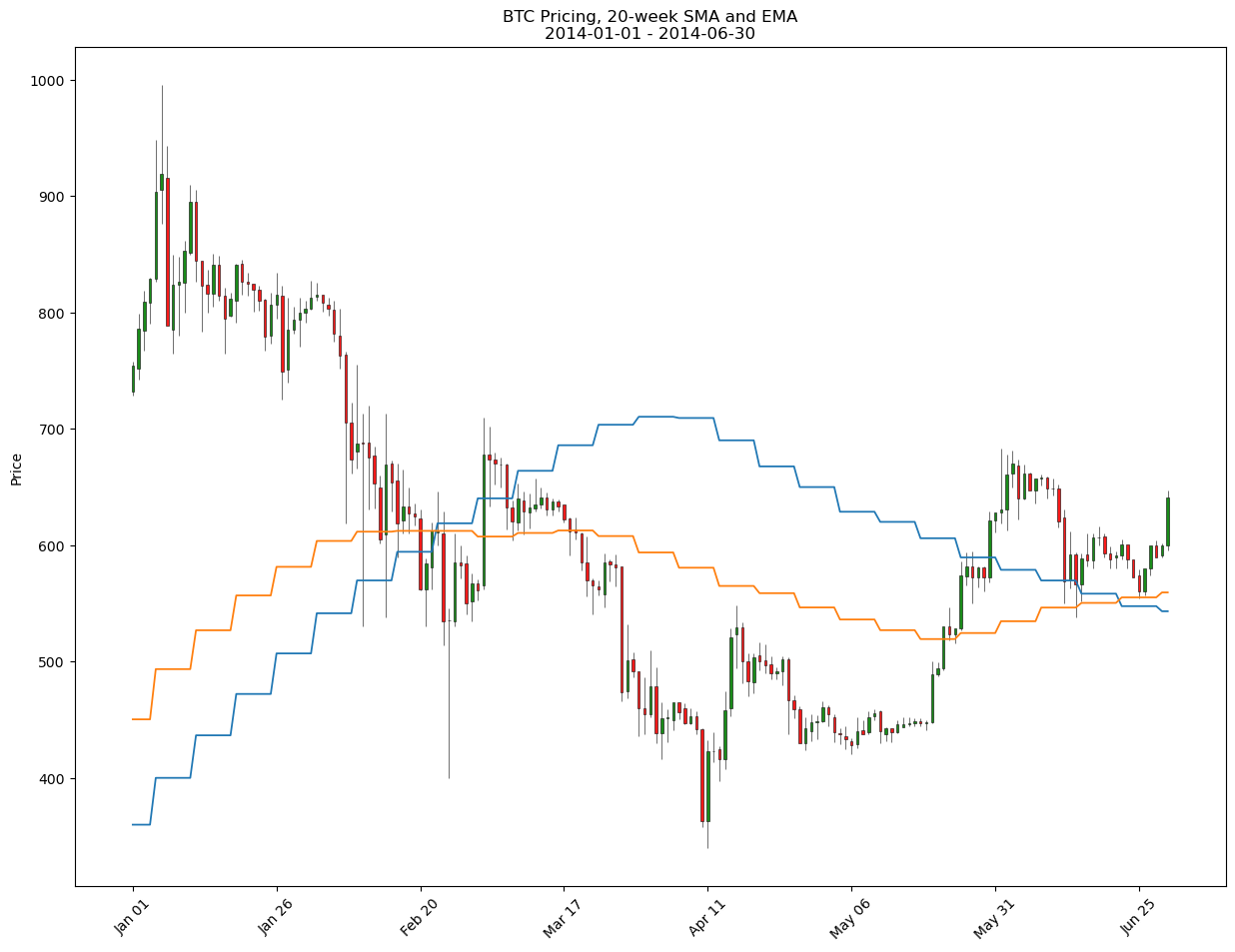

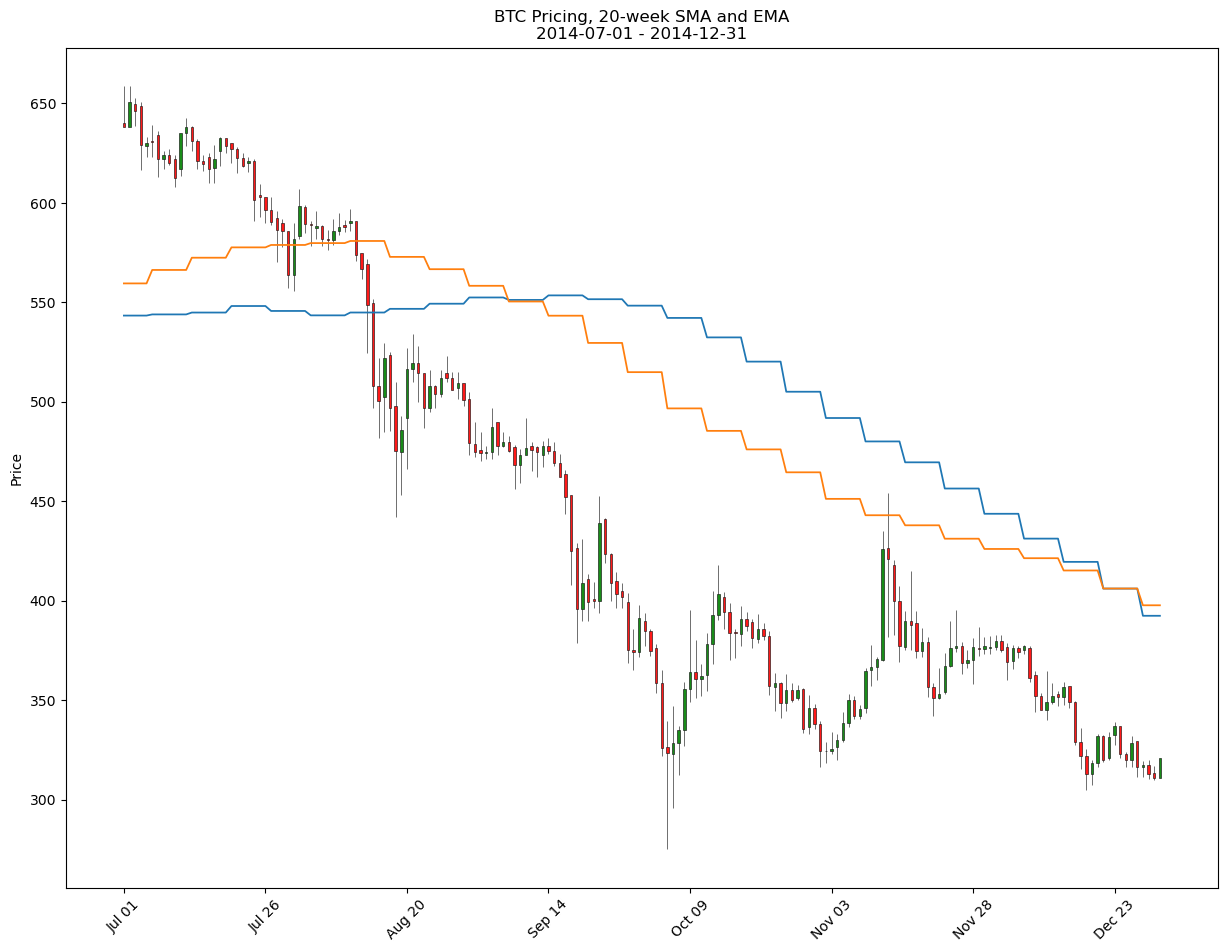

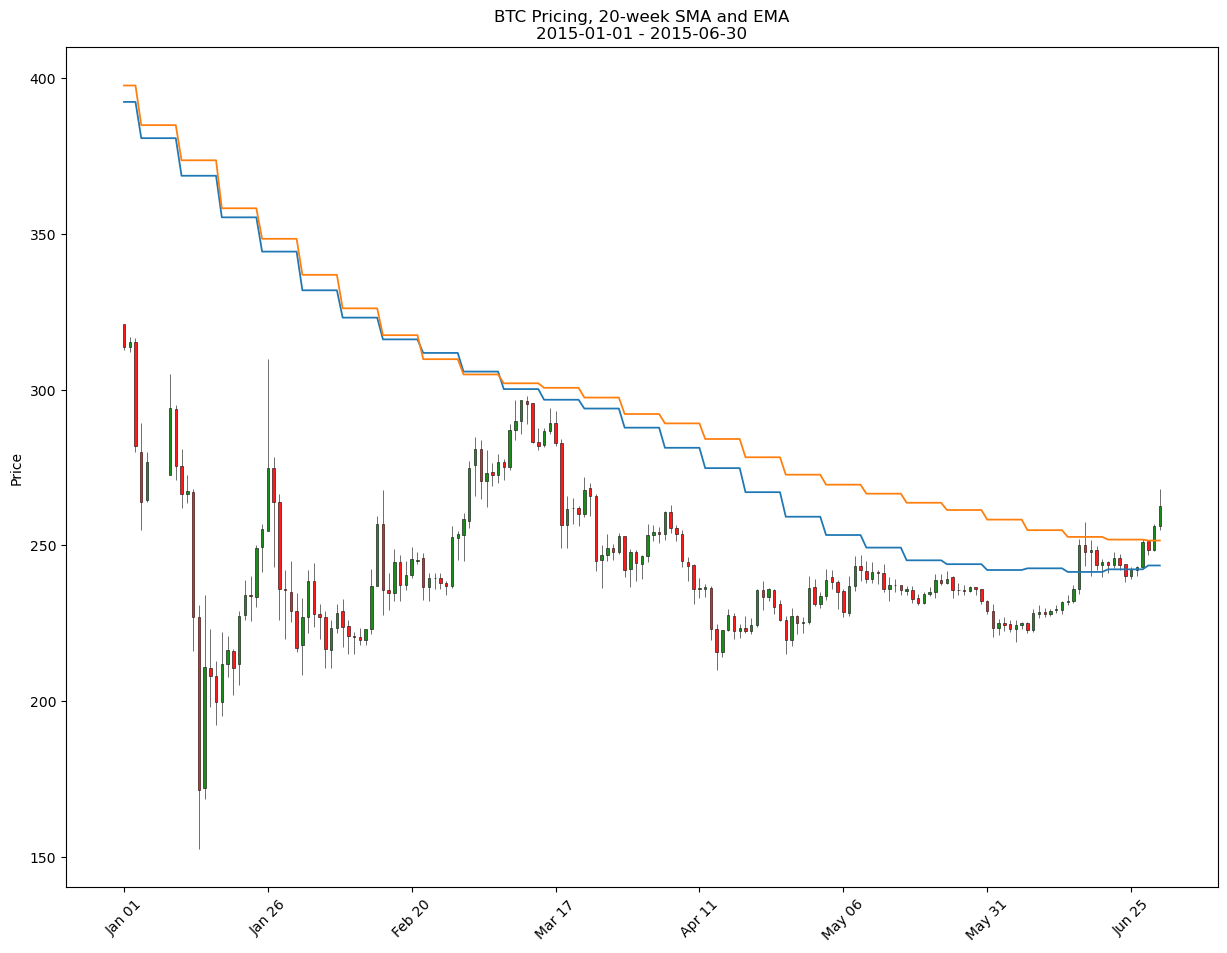

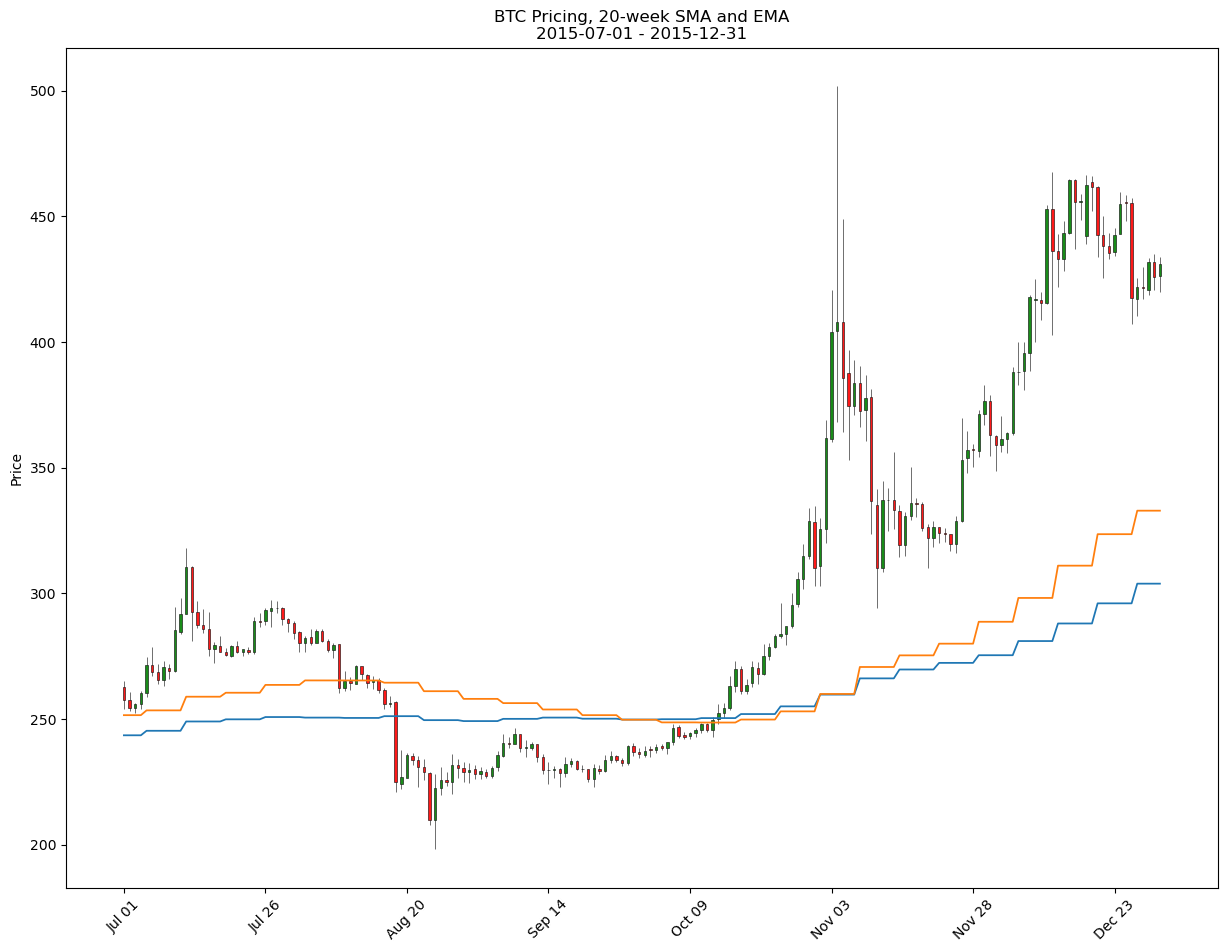

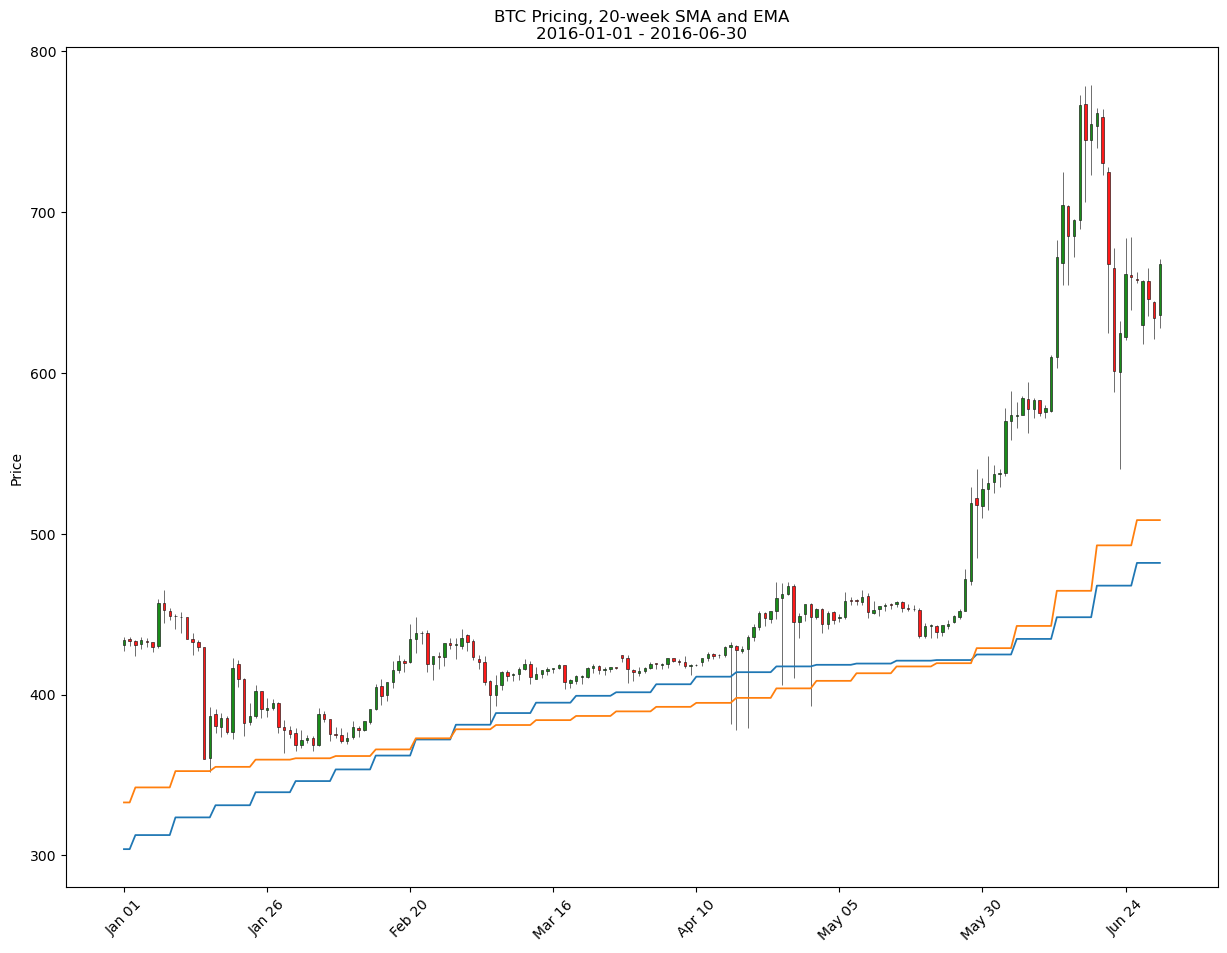

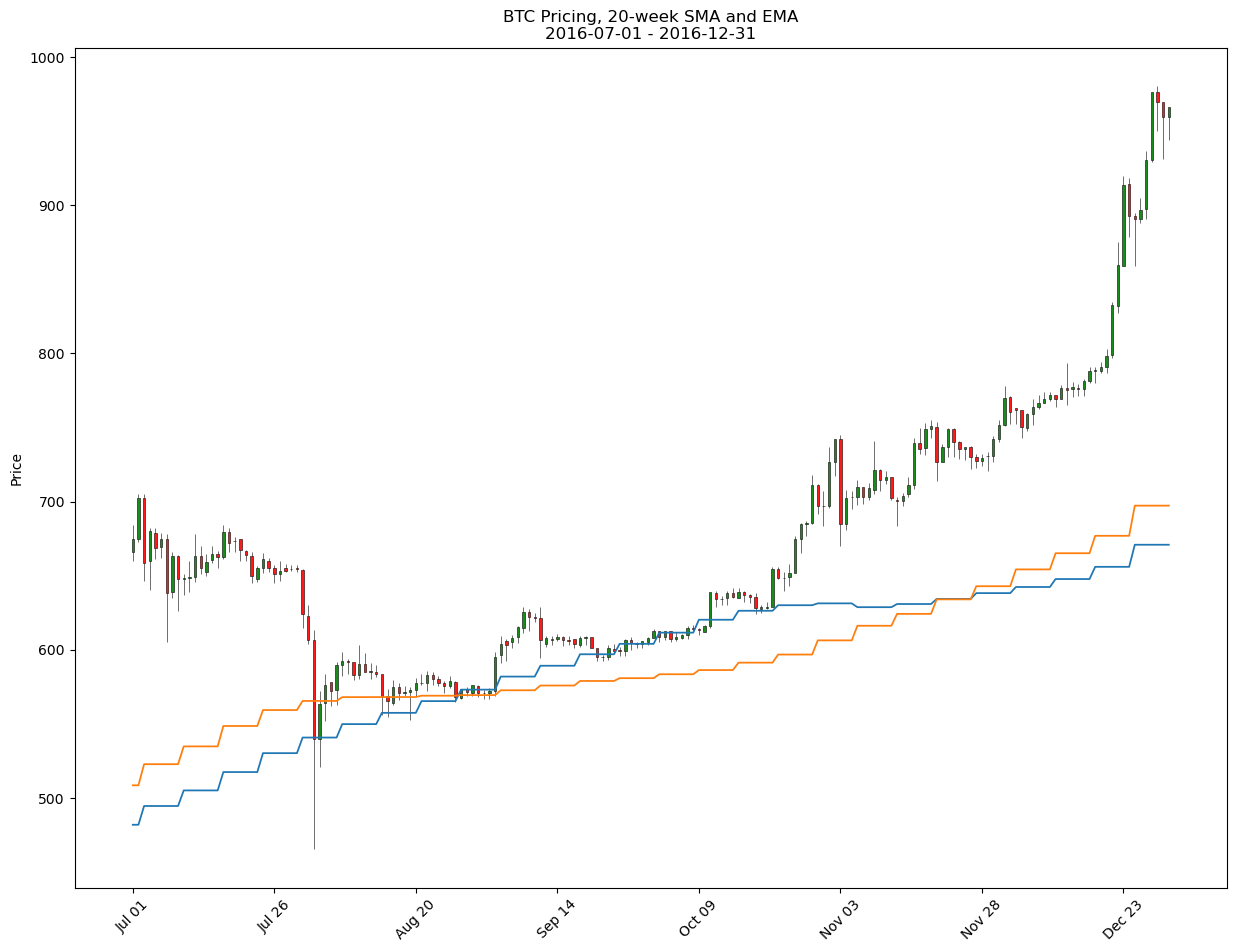

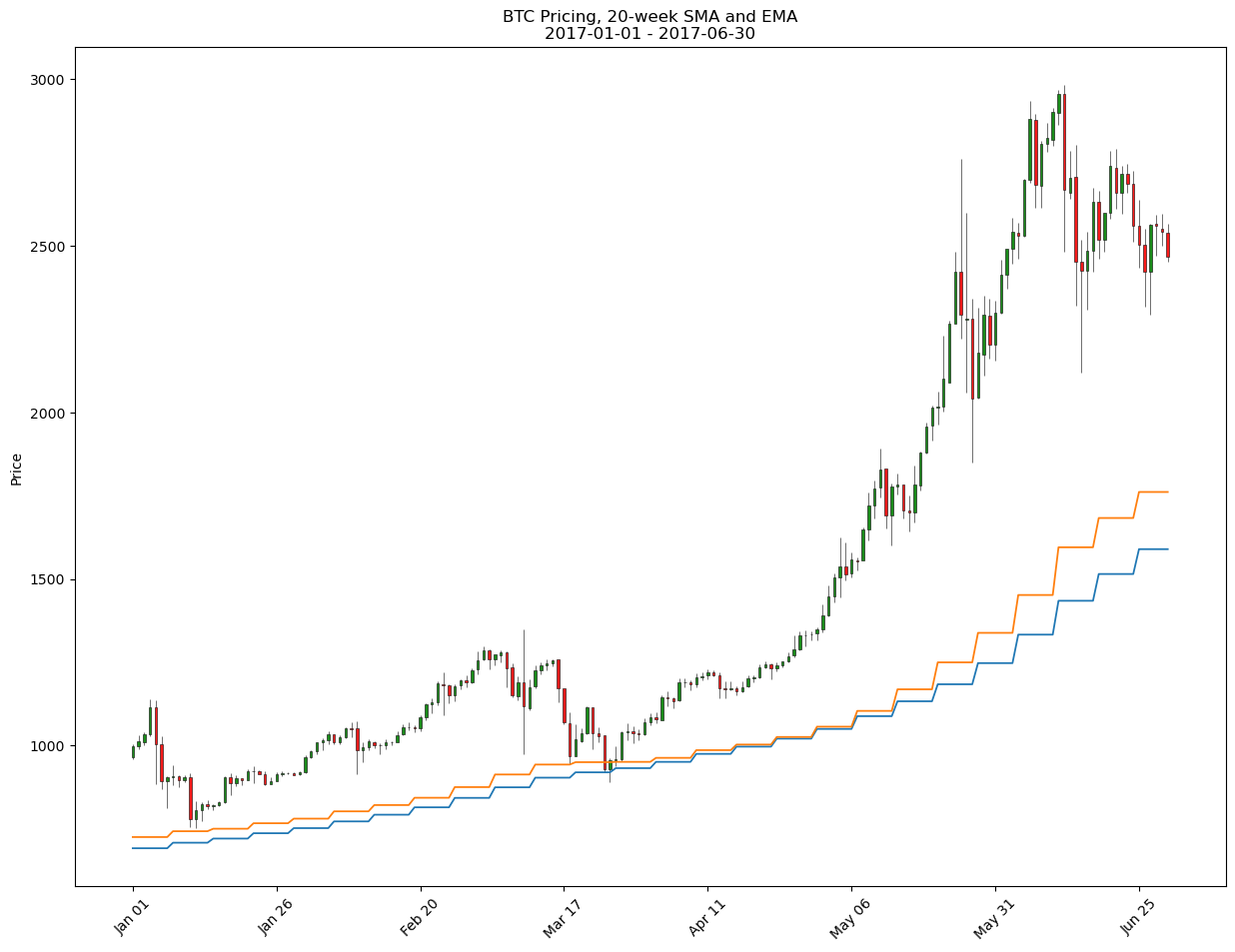

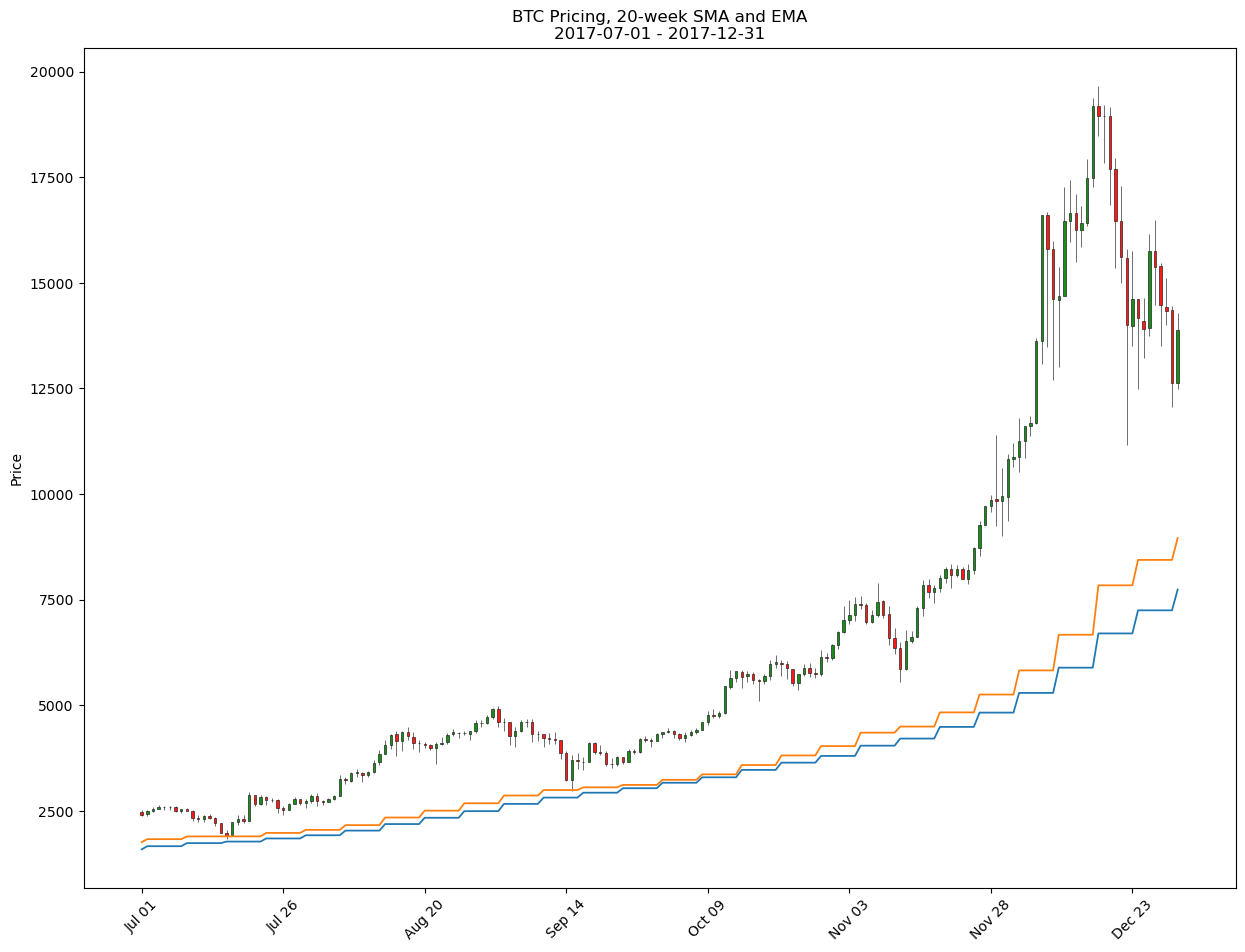

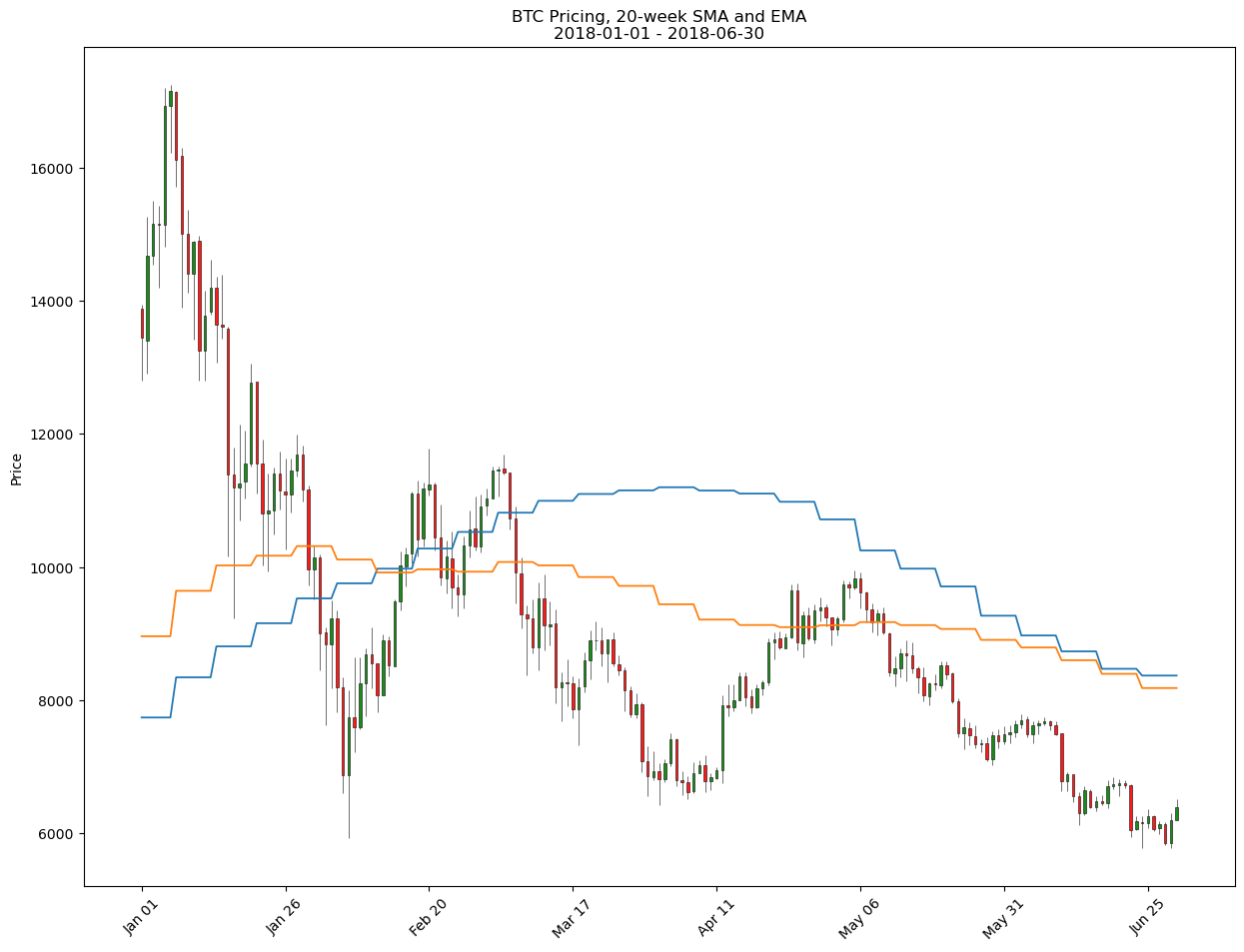

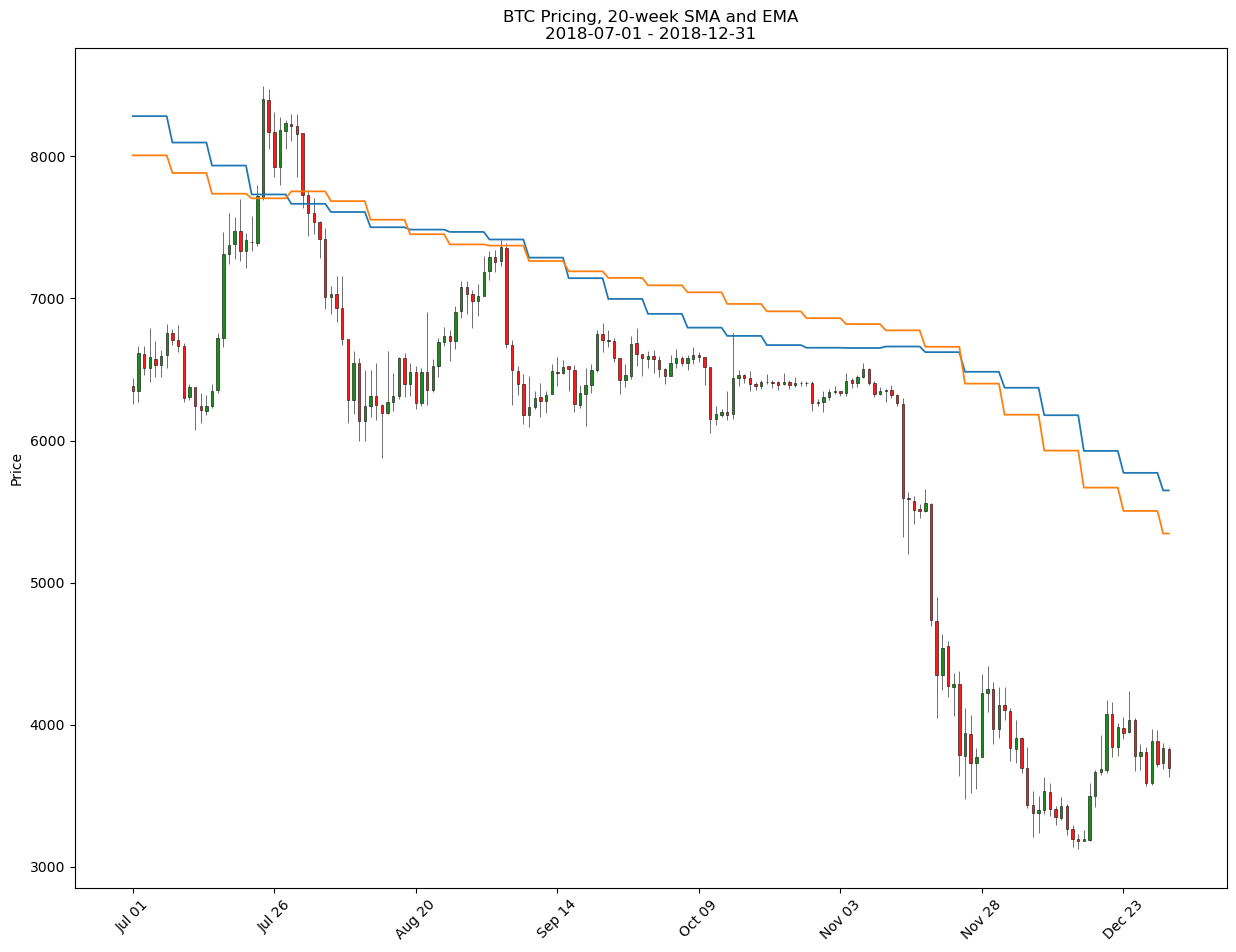

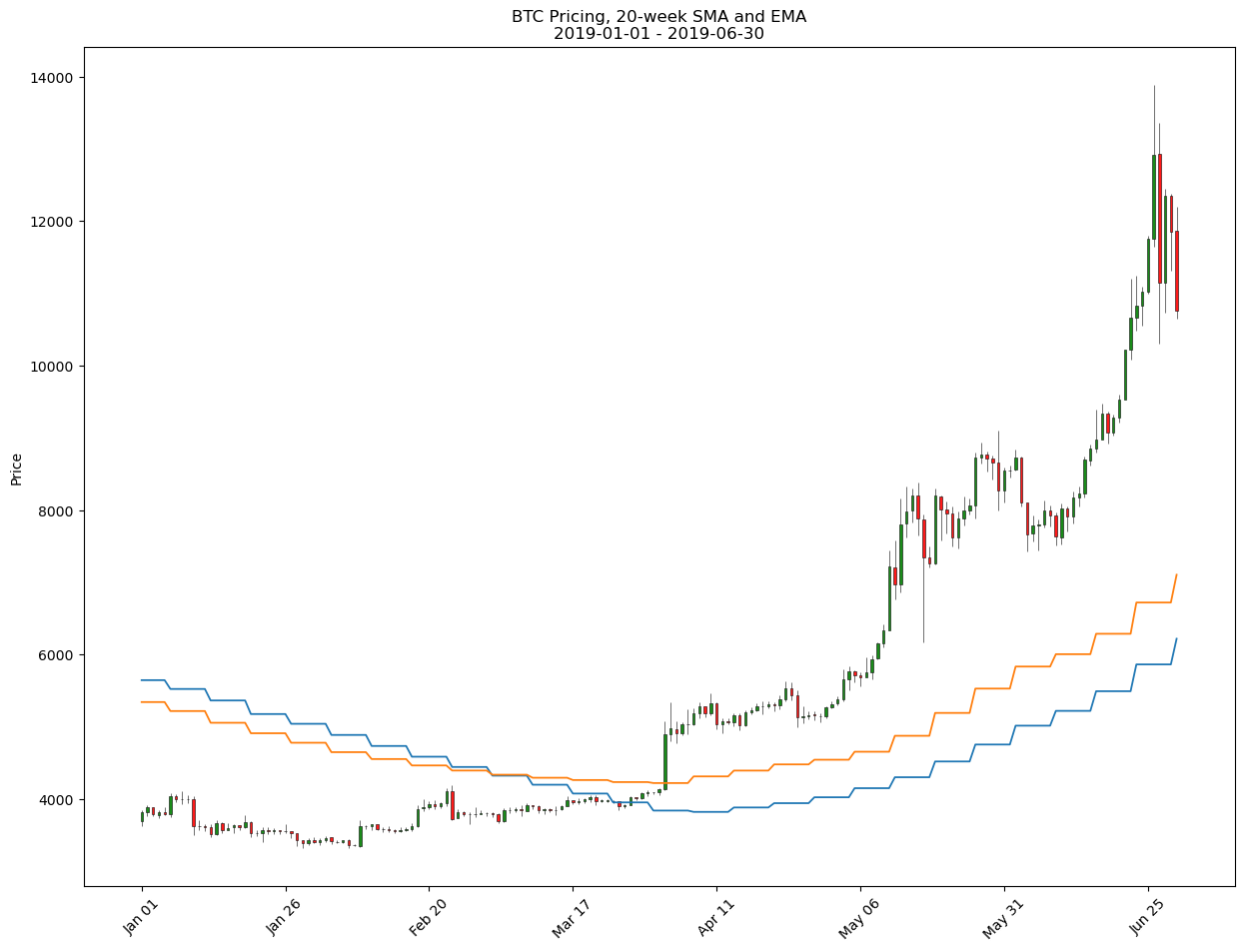

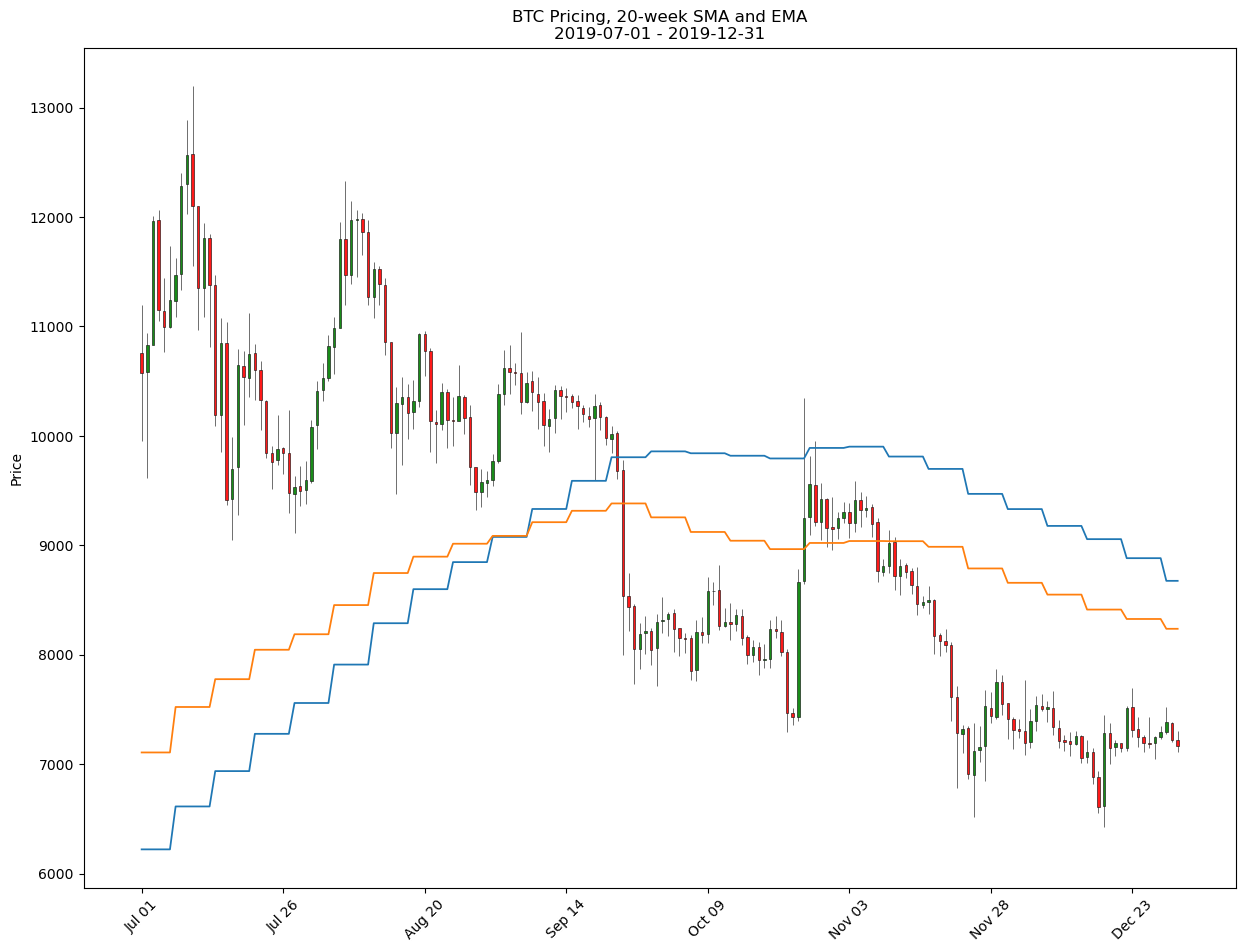

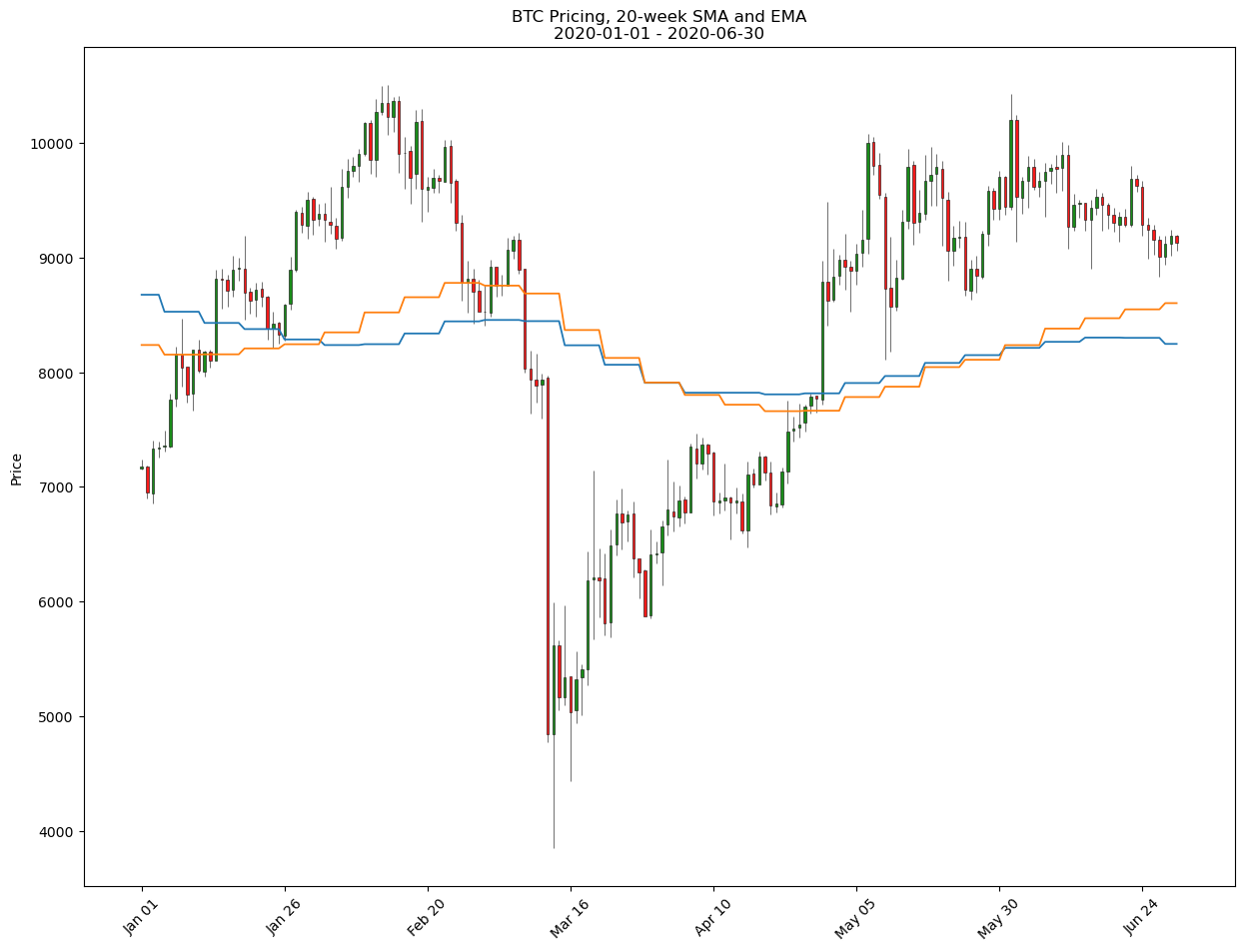

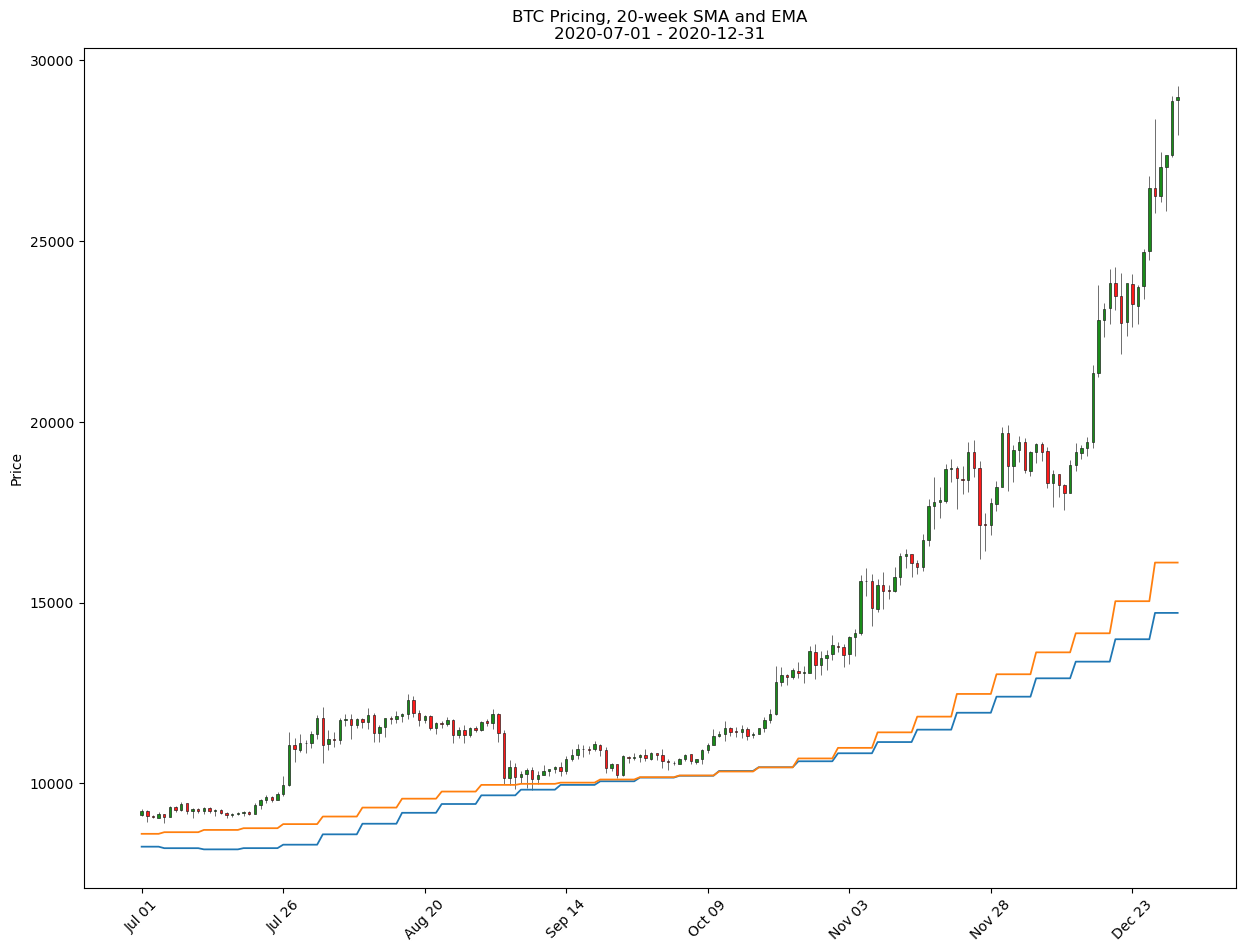

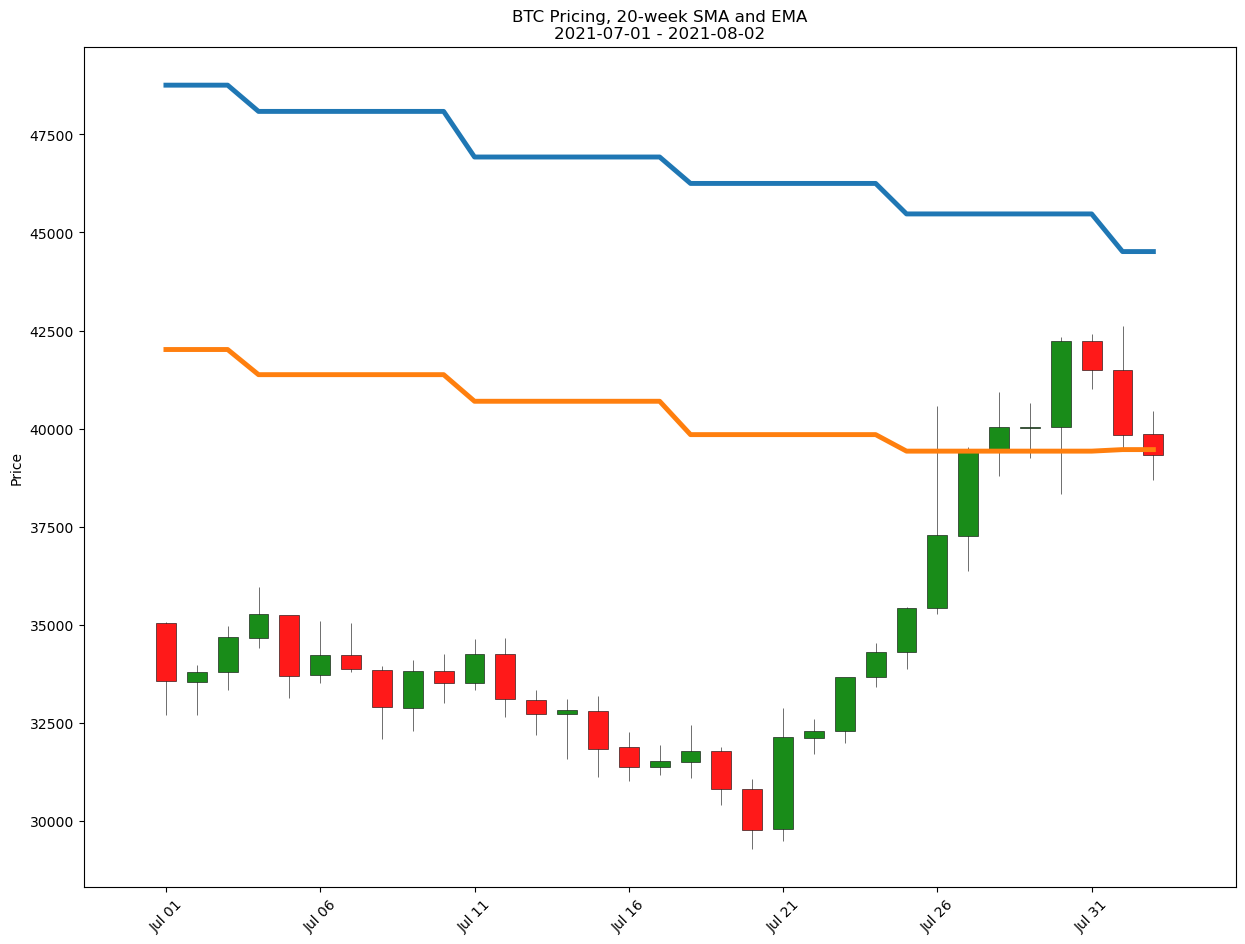

The methodology will be based on daily deviations from the 20-week “Simple Moving Average,” which is the average price of an asset over the previous 20 weeks. The basic idea is that prices that are significantly below the 20-week SMA indicate an oversold condition, and prices significantly above it indicate an overbought condition.

import datetime as dt

import pandas as pd

import matplotlib.pyplot as plt

import mplfinance as mpf

import numpy as np

import matplotlib as mpl

The data is taken from a tradingview.com export of Bitcoin pricing from the Bitstamp exchange. The data includes BTC pricing info (Open, High, Low, and Close) and volume (daily, Volume, and 20-day simple moving average, Volume MA) for over 3600 days since August 18, 2011.

The data also includes a 20-week week simple moving average, MA, and exponential moving average, EMA, as calculated by tradingview.com. Since these are calculated weekly and as an average of the past 20 weeks one value is available per week after 20 weeks have elapsed in the raw data.

Open Raw Data

d = pd.read_csv('dynamic-dollar-cost-averaging-into-bitcoin/BITSTAMP_BTCUSD, 1D.csv')

d.info()

d.head()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 3605 entries, 0 to 3604

Data columns (total 9 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 time 3605 non-null object

1 open 3605 non-null float64

2 high 3605 non-null float64

3 low 3605 non-null float64

4 close 3605 non-null float64

5 EMA 502 non-null float64

6 MA 502 non-null float64

7 Volume 3605 non-null float64

8 Volume MA 3586 non-null float64

dtypes: float64(8), object(1)

memory usage: 253.6+ KB

| time | open | high | low | close | EMA | MA | Volume | Volume MA | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | 2011-08-18T00:00:00Z | 10.90 | 10.90 | 10.90 | 10.90 | NaN | NaN | 0.489908 | NaN |

| 1 | 2011-08-19T00:00:00Z | 11.85 | 11.85 | 11.15 | 11.69 | NaN | NaN | 1.926578 | NaN |

| 2 | 2011-08-20T00:00:00Z | 11.70 | 11.70 | 11.70 | 11.70 | NaN | NaN | 0.085470 | NaN |

| 3 | 2011-08-21T00:00:00Z | 11.70 | 11.70 | 11.70 | 11.70 | NaN | NaN | 0.085470 | NaN |

| 4 | 2011-08-22T00:00:00Z | 11.70 | 11.70 | 11.70 | 11.70 | NaN | NaN | 0.028632 | NaN |

Basic Cleansing

- Convert “time” to “date”

- Capture day of week

dow - Identify any missing days by merging into a complete list of dates from the start to end date

- Peel off

Open,High,Low,Closedata to use for candlesticks - Subset to just the minimally necessary columns

d['date'] = pd.to_datetime(d['time'])

complete_date_list = pd.DataFrame(

pd.date_range(d['date'].min(),

d['date'].max()),

columns=['date'])

d = (pd.merge(complete_date_list, d,

on=['date'],

how='left')

.reset_index(drop=True))

ohlc = (d[['date','open','high','low','close']]

.set_index('date'))

ohlc.info()

<class 'pandas.core.frame.DataFrame'>

DatetimeIndex: 3638 entries, 2011-08-18 00:00:00+00:00 to 2021-08-02 00:00:00+00:00

Data columns (total 4 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 open 3605 non-null float64

1 high 3605 non-null float64

2 low 3605 non-null float64

3 close 3605 non-null float64

dtypes: float64(4)

memory usage: 142.1 KB

d['dow'] = pd.to_datetime(d['date']).dt.dayofweek

d = (d[['date','dow','open','high','low','close','MA','EMA']]

.reset_index(drop=True))

d.info()

<class 'pandas.core.frame.DataFrame'>

RangeIndex: 3638 entries, 0 to 3637

Data columns (total 8 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 date 3638 non-null datetime64[ns, UTC]

1 dow 3638 non-null int64

2 open 3605 non-null float64

3 high 3605 non-null float64

4 low 3605 non-null float64

5 close 3605 non-null float64

6 MA 502 non-null float64

7 EMA 502 non-null float64

dtypes: datetime64[ns, UTC](1), float64(6), int64(1)

memory usage: 227.5 KB

Exploration

- A total of 33 days are missing pricing information. This is less than 1% of the total values in the dataset, and 30/33 were in 2011.

- The

MAandEMAvalues are available for Sundays (day6).

d[d['close'].isna()]['date'].dt.year.value_counts()

2011 30

2015 3

Name: date, dtype: int64

d[~d['MA'].isna()]['dow'].value_counts()

6 501

0 1

Name: dow, dtype: int64

Calculate a 20-Week Moving Averages using Pandas

- Use Sundays for moving averages, like TradingView.com

- Moving averages calculated in Pandas match those calculated by TradingView.com most of the time. Proceed using the Pandas values.

- Fill the moving averages forward (a given week’s moving average is based on the rolling average price as of the last Sunday).

ma = d[d['dow']==6].copy()

ma['sma_20'] = (ma['close']

.rolling(window=20)

.mean())

ma['ema_20'] = (ma['close']

.ewm(span=20,

min_periods=20,

adjust=False)

.mean())

ma.info()

<class 'pandas.core.frame.DataFrame'>

Int64Index: 520 entries, 3 to 3636

Data columns (total 10 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 date 520 non-null datetime64[ns, UTC]

1 dow 520 non-null int64

2 open 513 non-null float64

3 high 513 non-null float64

4 low 513 non-null float64

5 close 513 non-null float64

6 MA 501 non-null float64

7 EMA 501 non-null float64

8 sma_20 485 non-null float64

9 ema_20 494 non-null float64

dtypes: datetime64[ns, UTC](1), float64(8), int64(1)

memory usage: 44.7 KB

(ma['MA'].round(1)==ma['sma_20'].round(1)).value_counts()

True 485

False 35

dtype: int64

(ma['EMA'].round(1)==ma['ema_20'].round(1)).value_counts()

True 463

False 57

dtype: int64

d = pd.merge(d.drop(columns=['MA','EMA']),

ma.drop(columns=['MA','EMA']),

how='left')

d['sma_20'] = d['sma_20'].fillna(method='ffill')

d['ema_20'] = d['ema_20'].fillna(method='ffill')

d.loc[d['ema_20'] <= d['sma_20'],'comp_20'] = d['ema_20']

d['comp_20'] = d['comp_20'].fillna(d['sma_20'])

d['date'] = d['date'].dt.date

d = d.drop(columns=['dow'])

d.info()

d.tail()

<class 'pandas.core.frame.DataFrame'>

Int64Index: 3638 entries, 0 to 3637

Data columns (total 8 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 date 3638 non-null object

1 open 3605 non-null float64

2 high 3605 non-null float64

3 low 3605 non-null float64

4 close 3605 non-null float64

5 sma_20 3390 non-null float64

6 ema_20 3453 non-null float64

7 comp_20 3390 non-null float64

dtypes: float64(7), object(1)

memory usage: 384.8+ KB

| date | open | high | low | close | sma_20 | ema_20 | comp_20 | |

|---|---|---|---|---|---|---|---|---|

| 3633 | 2021-07-29 | 40024.80 | 40662.80 | 39239.95 | 40037.51 | 45470.5640 | 39427.295350 | 39427.295350 |

| 3634 | 2021-07-30 | 40045.21 | 42335.50 | 38331.37 | 42234.89 | 45470.5640 | 39427.295350 | 39427.295350 |

| 3635 | 2021-07-31 | 42236.72 | 42411.32 | 41007.51 | 41490.13 | 45470.5640 | 39427.295350 | 39427.295350 |

| 3636 | 2021-08-01 | 41489.16 | 42614.85 | 39424.27 | 39839.00 | 44511.6945 | 39466.505317 | 39466.505317 |

| 3637 | 2021-08-02 | 39875.20 | 40459.31 | 38687.62 | 39335.62 | 44511.6945 | 39466.505317 | 39466.505317 |

Calculate Composite % Delta between Daily Price and Weekly Moving Averages

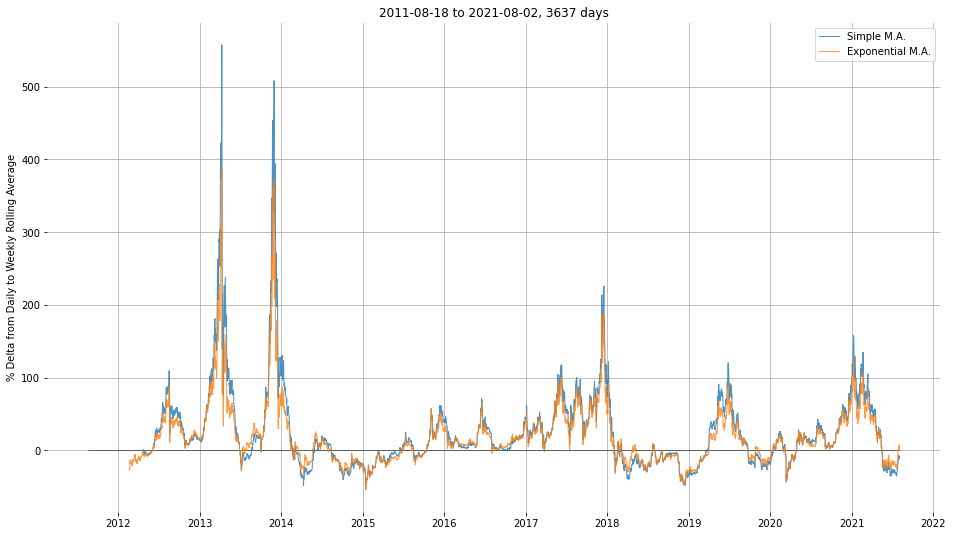

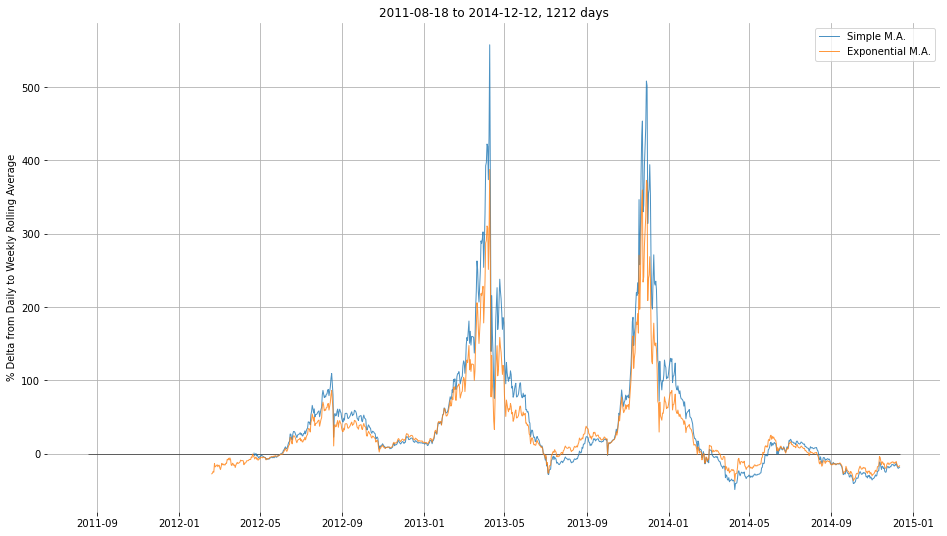

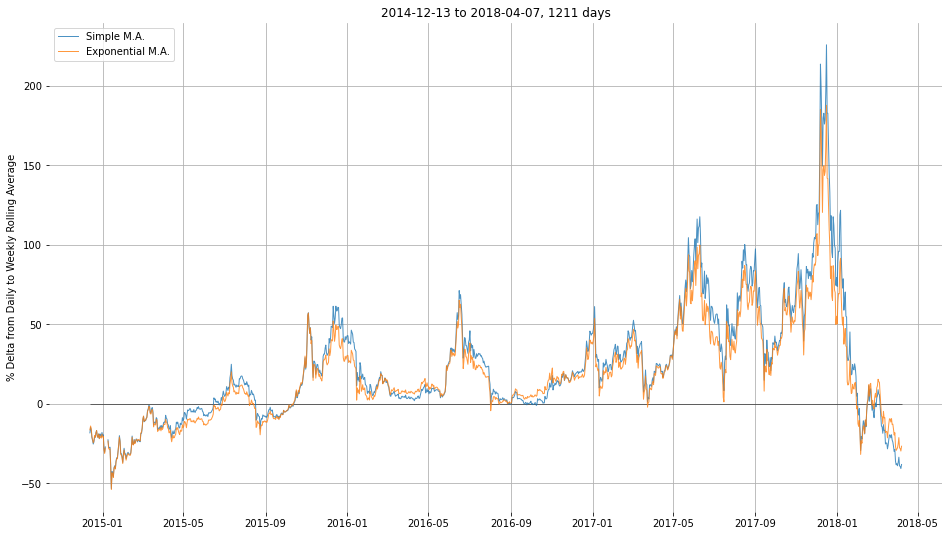

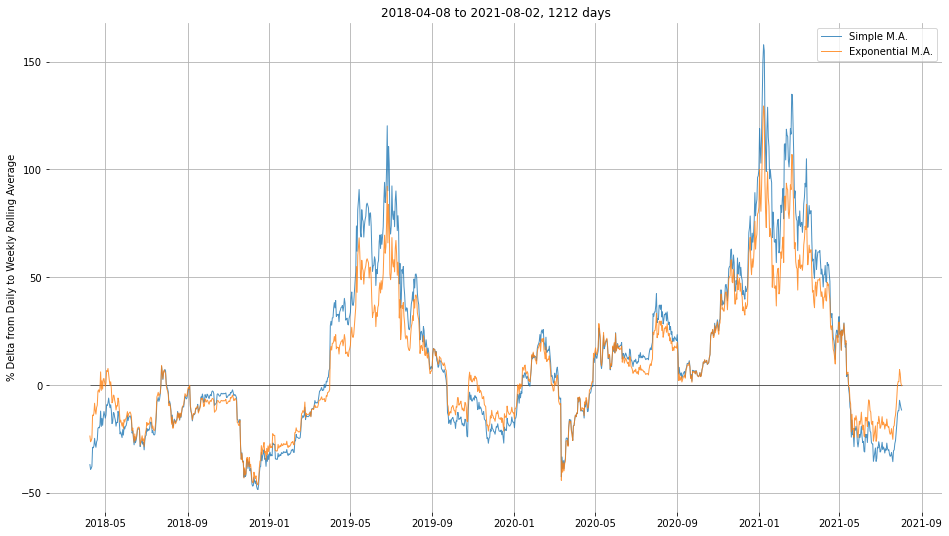

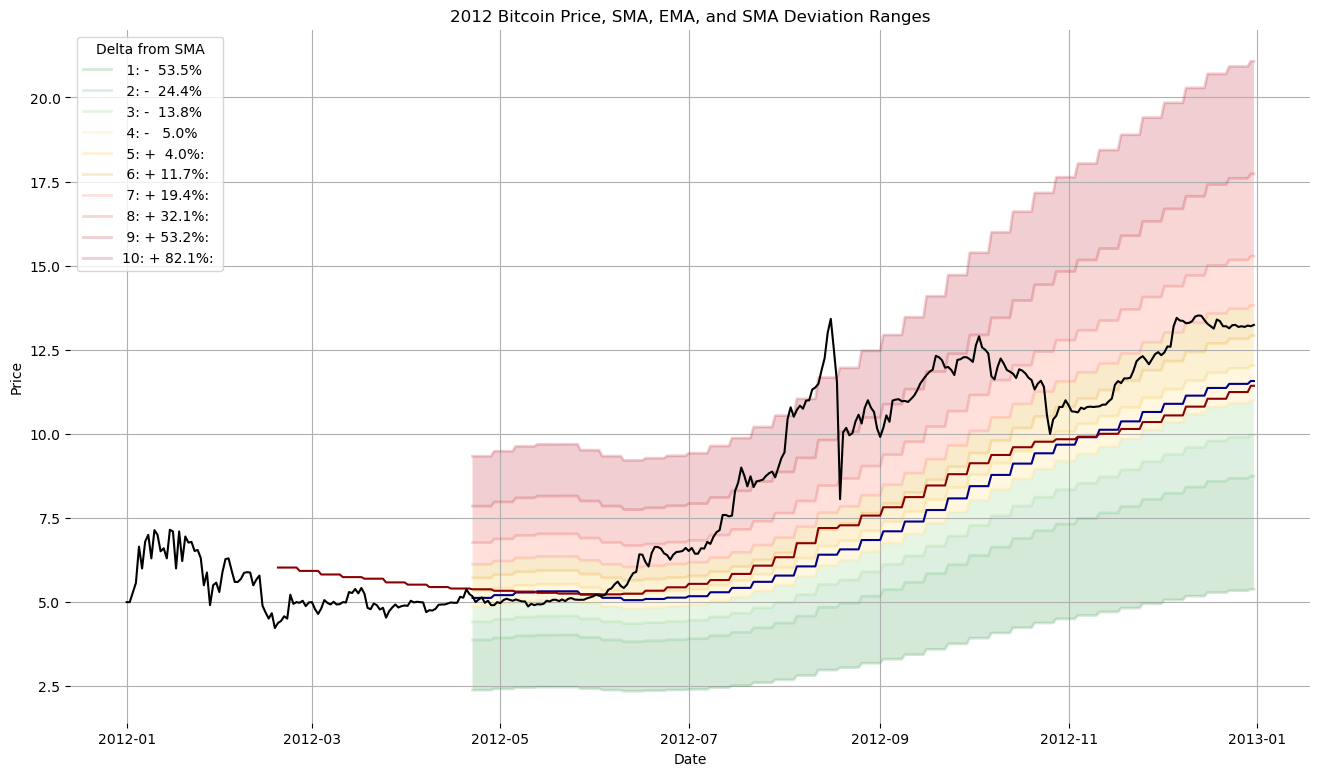

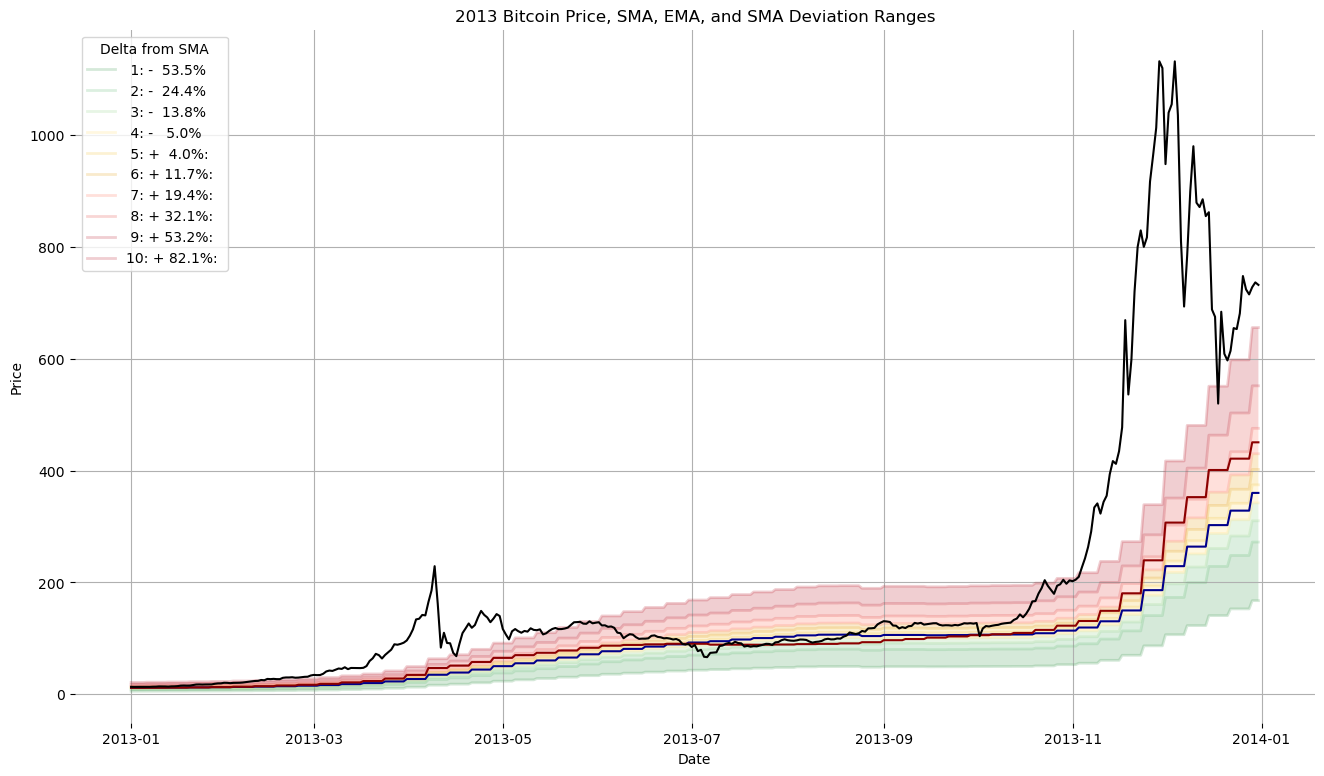

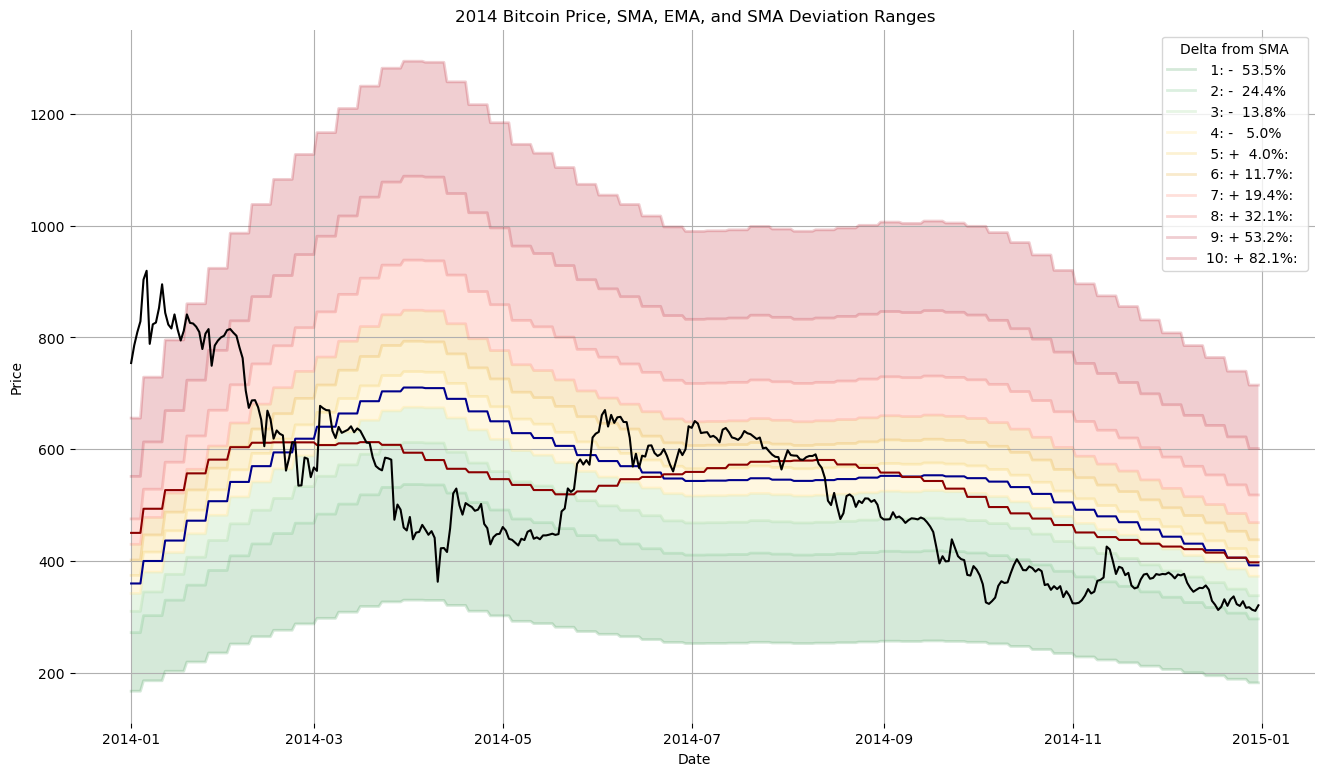

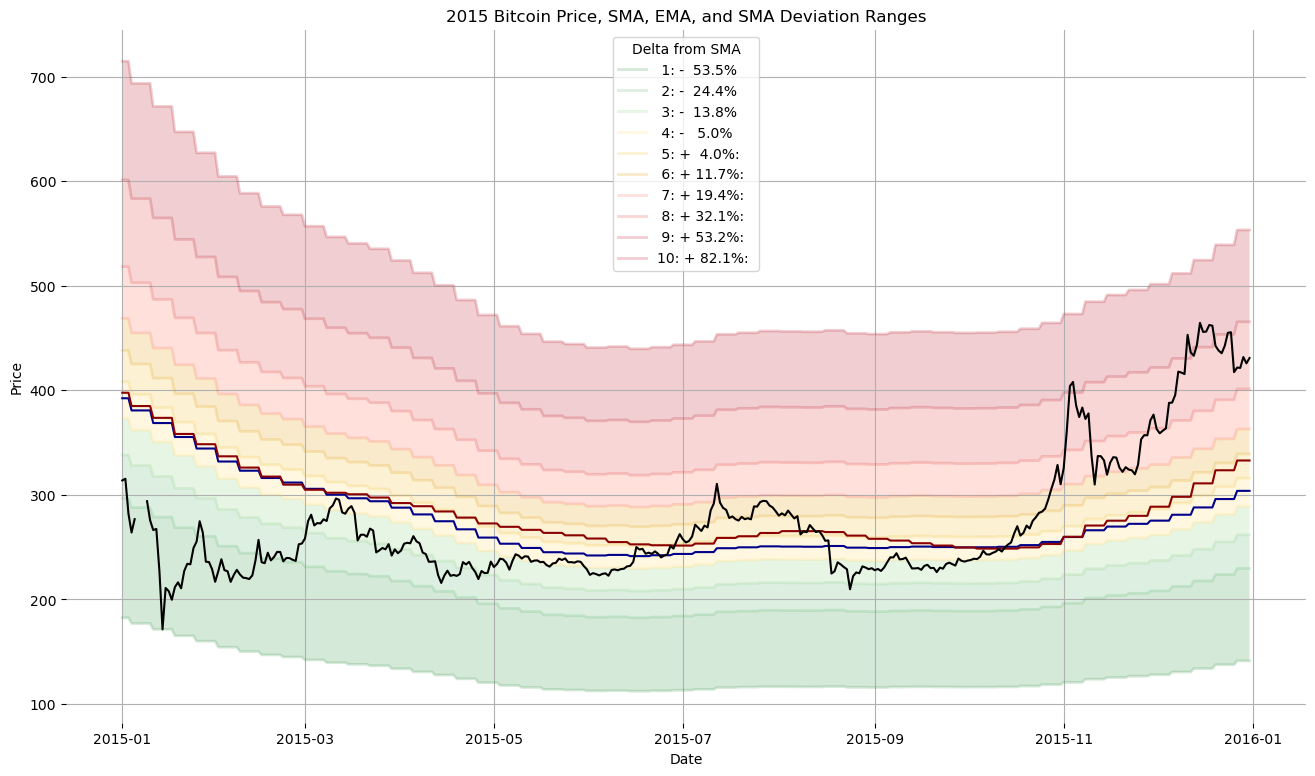

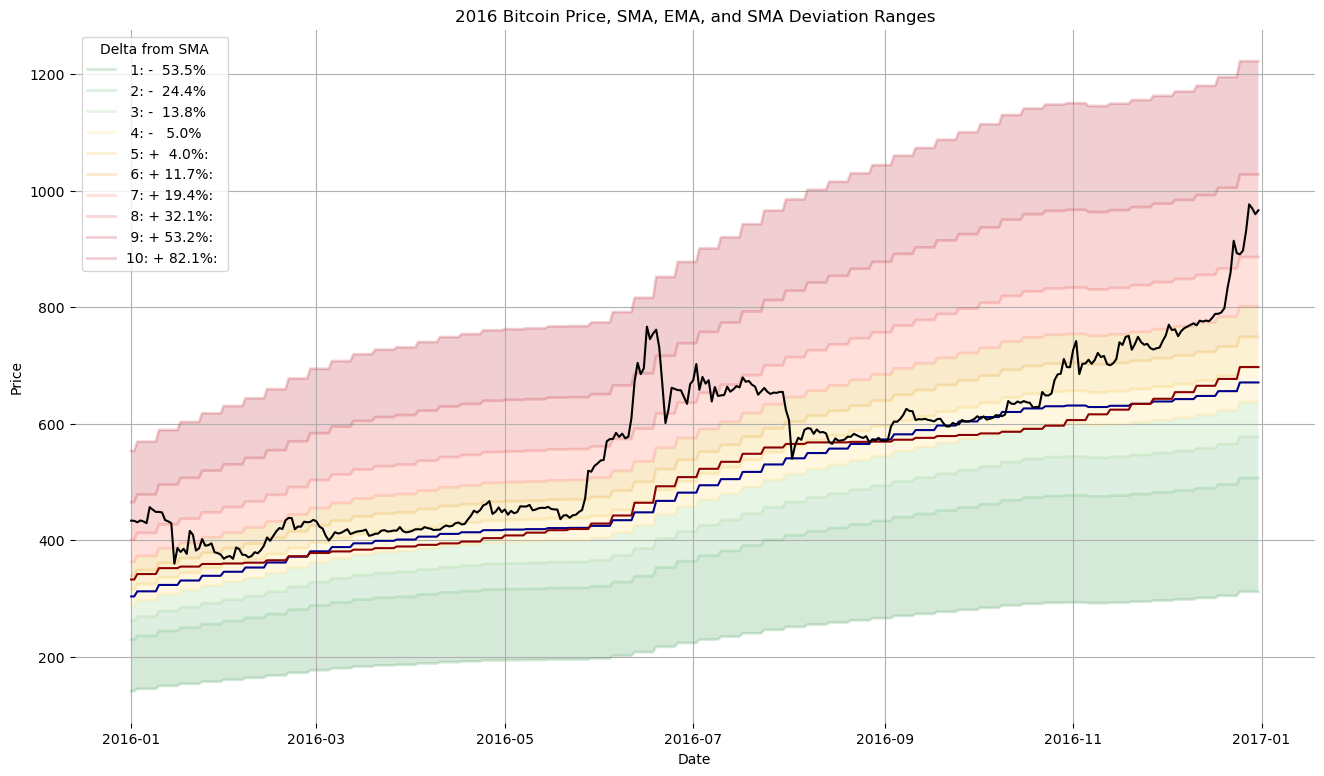

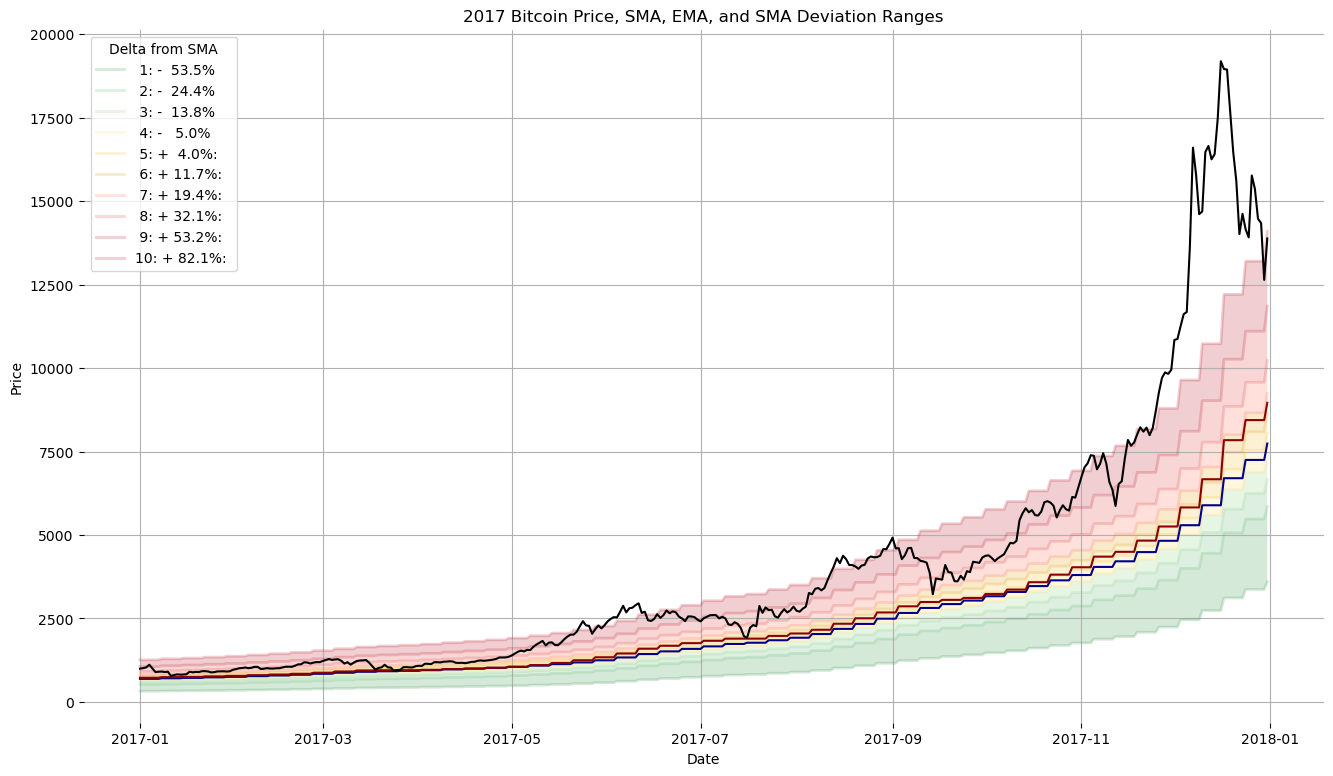

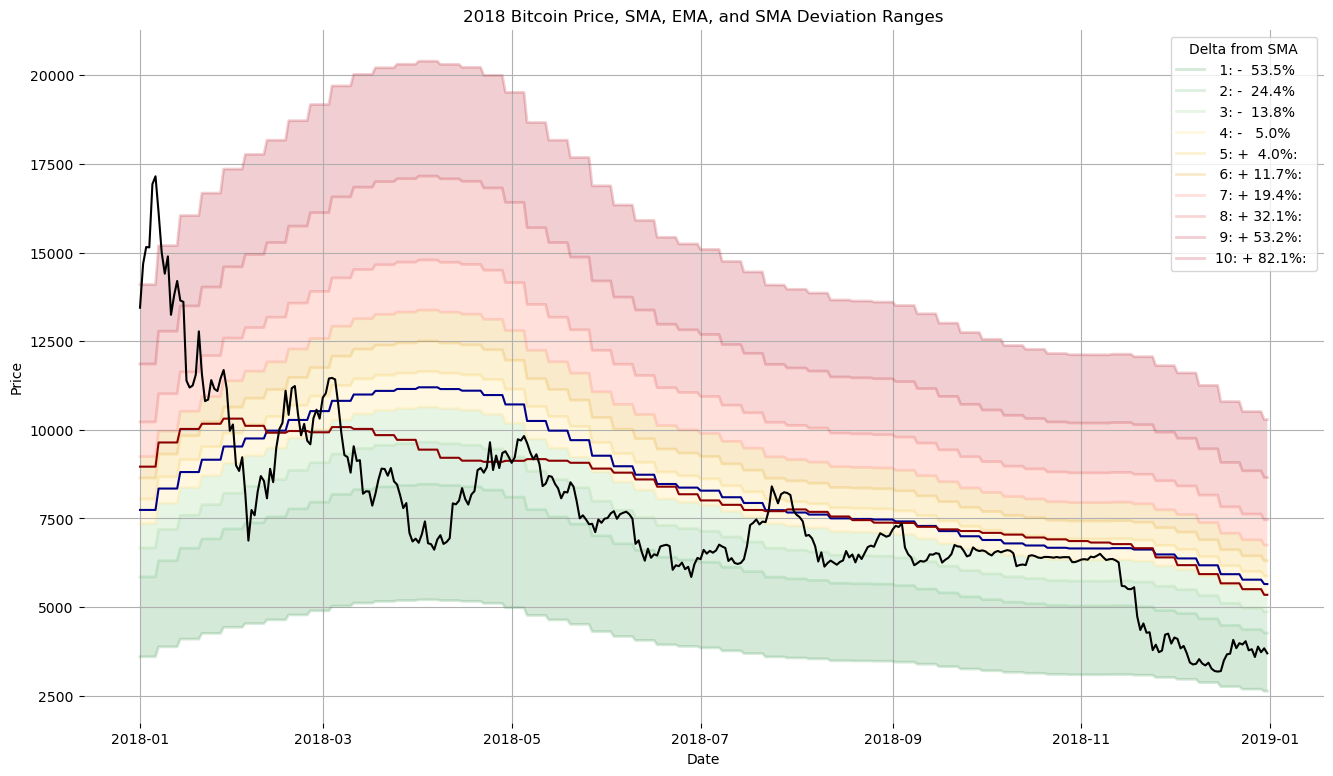

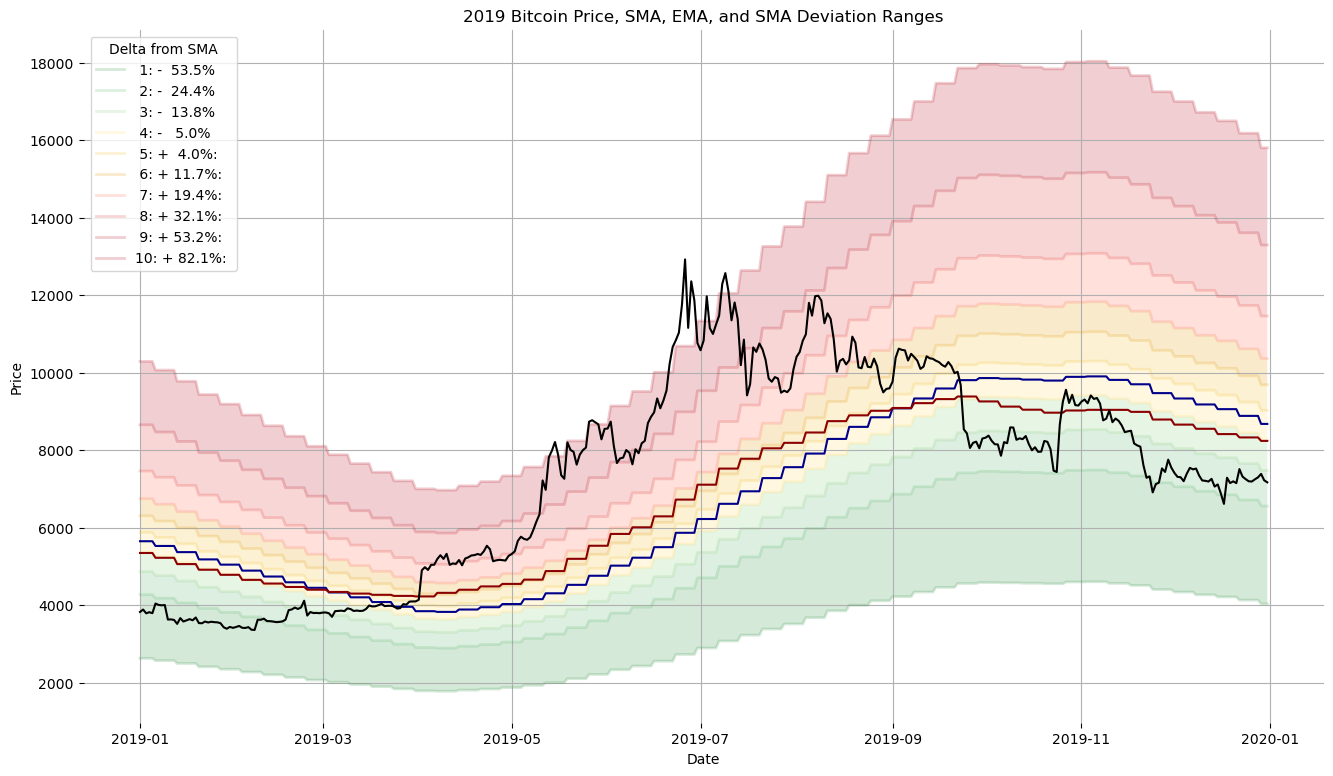

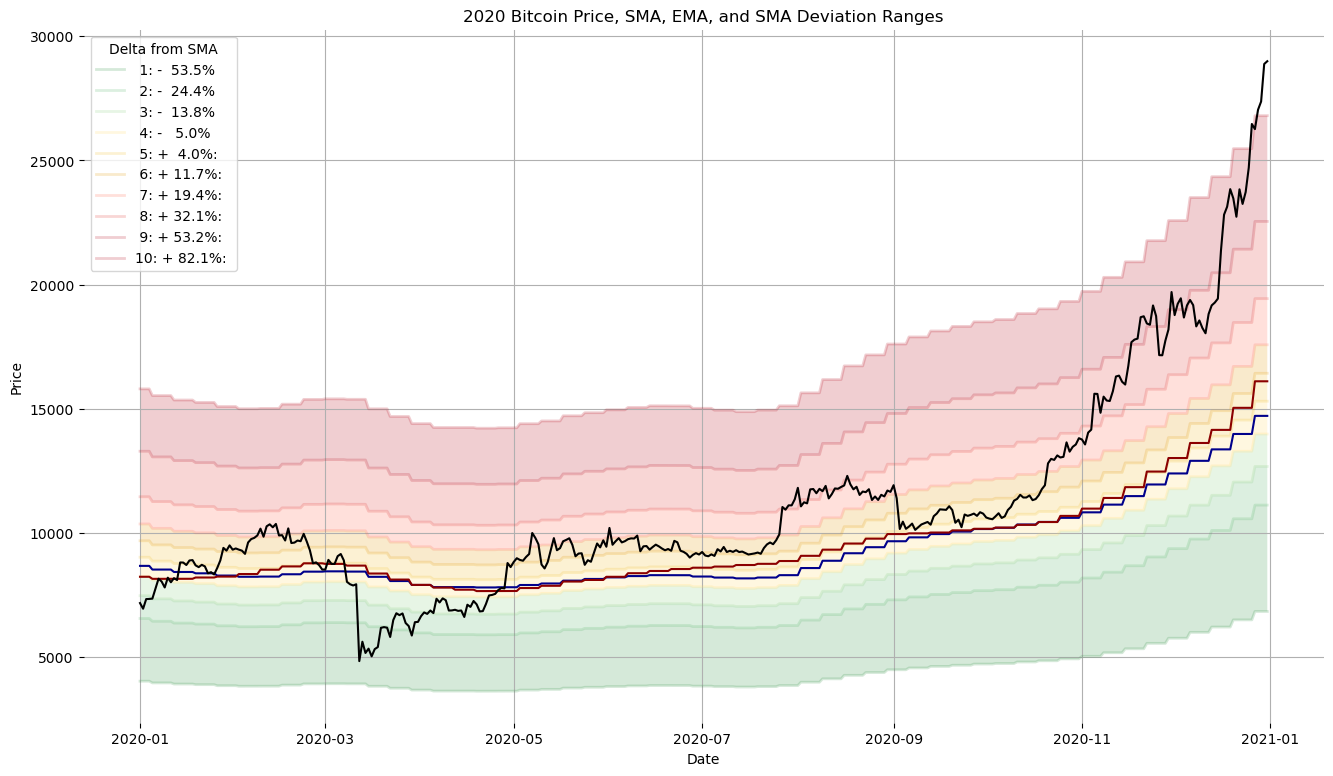

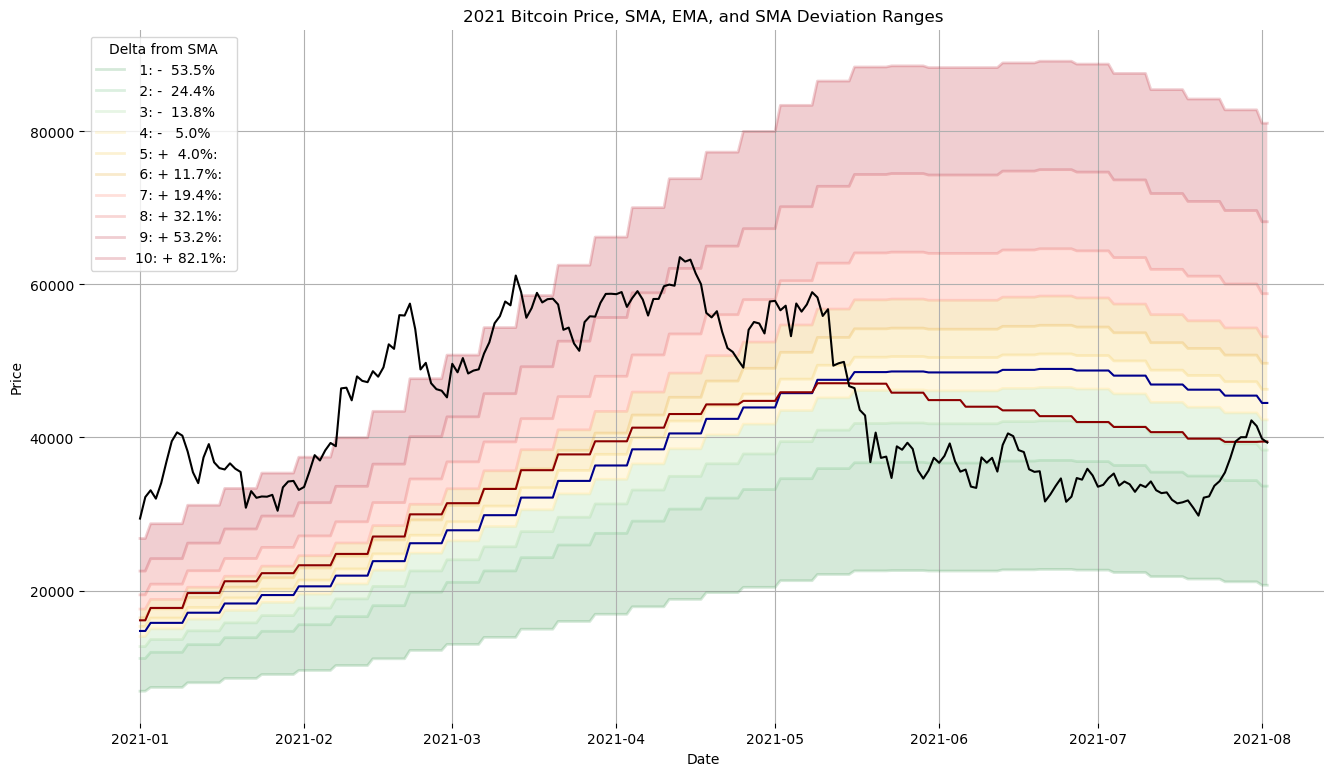

Takeaways from the plots and analysis below:

- Overall volatility (as measured by deviations from the rolling weekly averages) is decreasing as bitcoin and cryptocurrencies gain market cap.

- Deviations from the Exponential moving average are smaller than the Simple moving average. This is expected since the Exponential moving average is itself a more dynamic indicator, responding more quickly to changes in market price.

- There is a significant difference year-to-year in the average delta to the moving average, reflecting the cylical nature of Bitcoin’s pricing.

d['sma_delta'] = ((d['close']-d['sma_20'])/d['sma_20']*100)

d['ema_delta'] = ((d['close']-d['ema_20'])/d['ema_20']*100)

d.tail()

| date | open | high | low | close | sma_20 | ema_20 | comp_20 | sma_delta | ema_delta | |

|---|---|---|---|---|---|---|---|---|---|---|

| 3633 | 2021-07-29 | 40024.80 | 40662.80 | 39239.95 | 40037.51 | 45470.5640 | 39427.295350 | 39427.295350 | -11.948508 | 1.547696 |

| 3634 | 2021-07-30 | 40045.21 | 42335.50 | 38331.37 | 42234.89 | 45470.5640 | 39427.295350 | 39427.295350 | -7.115975 | 7.120942 |

| 3635 | 2021-07-31 | 42236.72 | 42411.32 | 41007.51 | 41490.13 | 45470.5640 | 39427.295350 | 39427.295350 | -8.753870 | 5.231996 |

| 3636 | 2021-08-01 | 41489.16 | 42614.85 | 39424.27 | 39839.00 | 44511.6945 | 39466.505317 | 39466.505317 | -10.497678 | 0.943825 |

| 3637 | 2021-08-02 | 39875.20 | 40459.31 | 38687.62 | 39335.62 | 44511.6945 | 39466.505317 | 39466.505317 | -11.628572 | -0.331636 |

d['year'] = d['date'].astype(str).str[:4]

(d[['year','sma_delta']].groupby('year').mean()

.rename(columns={'sma_delta':'Avg of Daily % Delta to SMA'}).round(1)

.join(d[['year','ema_delta']].groupby('year').mean()

.rename(columns={'ema_delta':'Avg of Daily % Delta to EMA'}).round(1))

.join(d[['year','sma_delta']].groupby('year').std()

.rename(columns={'sma_delta':'S.D. of Daily % Delta to SMA'}).round(1))

.join(d[['year','ema_delta']].groupby('year').std()

.rename(columns={'ema_delta':'S.D. of Daily % Delta to EMA'})).round(1).dropna())

| Avg of Daily % Delta to SMA | Avg of Daily % Delta to EMA | S.D. of Daily % Delta to SMA | S.D. of Daily % Delta to EMA | |

|---|---|---|---|---|

| year | ||||

| 2012 | 28.7 | 15.7 | 25.6 | 22.8 |

| 2013 | 96.9 | 73.3 | 112.8 | 79.2 |

| 2014 | -2.7 | -2.3 | 33.9 | 23.3 |

| 2015 | 0.4 | -2.9 | 22.3 | 20.6 |

| 2016 | 15.0 | 14.0 | 14.2 | 11.1 |

| 2017 | 58.5 | 48.9 | 37.8 | 31.3 |

| 2018 | -11.0 | -9.8 | 24.7 | 19.4 |

| 2019 | 12.7 | 8.1 | 37.5 | 27.3 |

| 2020 | 16.4 | 13.8 | 22.9 | 20.2 |

| 2021 | 35.8 | 28.9 | 54.3 | 40.6 |

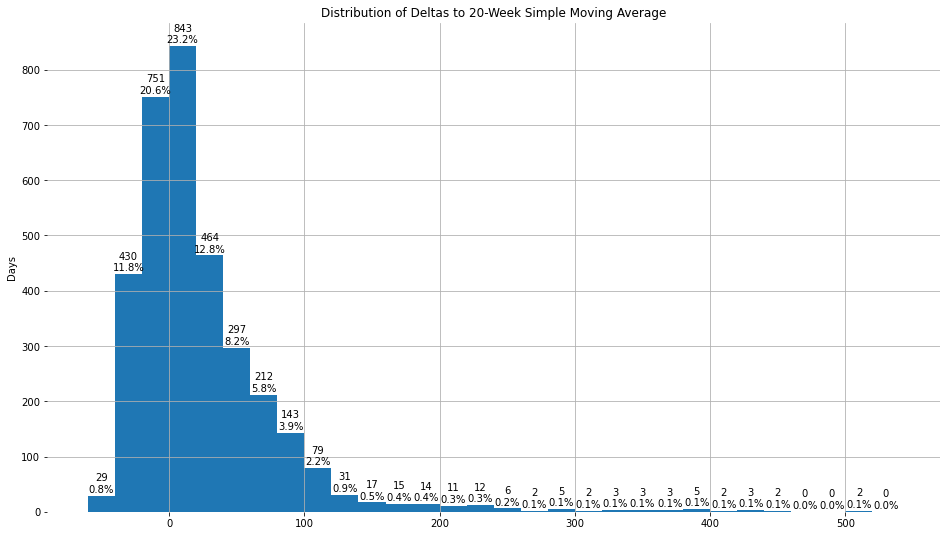

plt.figure(figsize=(16,9))

plt.hist(d['sma_delta'],

bins=np.arange(-60,560,20))

plt.grid()

for spine in plt.gca().spines.values():

spine.set_visible(False)

plt.ylabel('Days')

plt.title('Distribution of Deltas to 20-Week Simple Moving Average')

rects = plt.gca().patches

for n, r in enumerate(rects):

height = r.get_height()

plt.gca().text(r.get_x() + r.get_width() / 2,

height + 22,

str(int(height)) + '\n' + str(((height/d.shape[0])*100.).round(1)) + '%',

ha='center',

va='center')

for sub in [d,

d[d['date']<=dt.date(2014, 12, 12)],

d[(d['date']>dt.date(2014, 12, 12))&

(d['date']<=dt.date(2018, 4, 7))],

d[d['date']>dt.date(2018, 4, 7)]]:

plt.figure(figsize=(16,9))

plt.hlines([0], xmin = sub['date'].min(), xmax = sub['date'].max(), color='k', linewidth=0.5)

plt.plot(pd.to_datetime(sub['date']), sub['sma_delta'], label='Simple M.A.', alpha=0.8, linewidth=1)

plt.plot(pd.to_datetime(sub['date']), sub['ema_delta'], label='Exponential M.A.', alpha=0.8, linewidth=1)

min_date, max_date, delta_date = (str(sub['date'].min()),

str(sub['date'].max()),

str(sub['date'].max() - sub['date'].min())[:9])

plt.title(min_date + ' to ' + max_date + ', ' + delta_date)

plt.legend()

plt.grid(True)

plt.ylabel('% Delta from Daily to Weekly Rolling Average')

for spine in plt.gca().spines.values():

spine.set_visible(False);

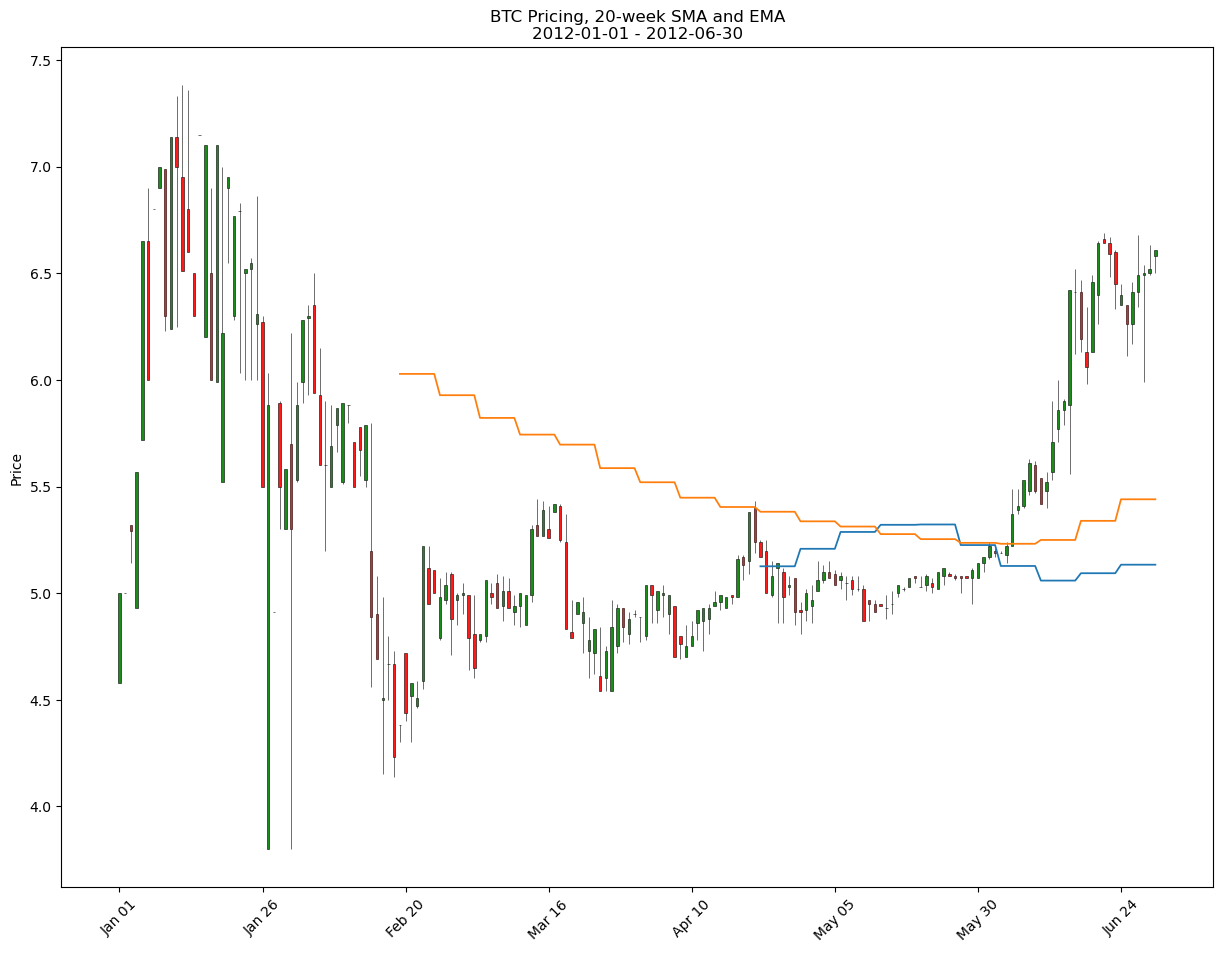

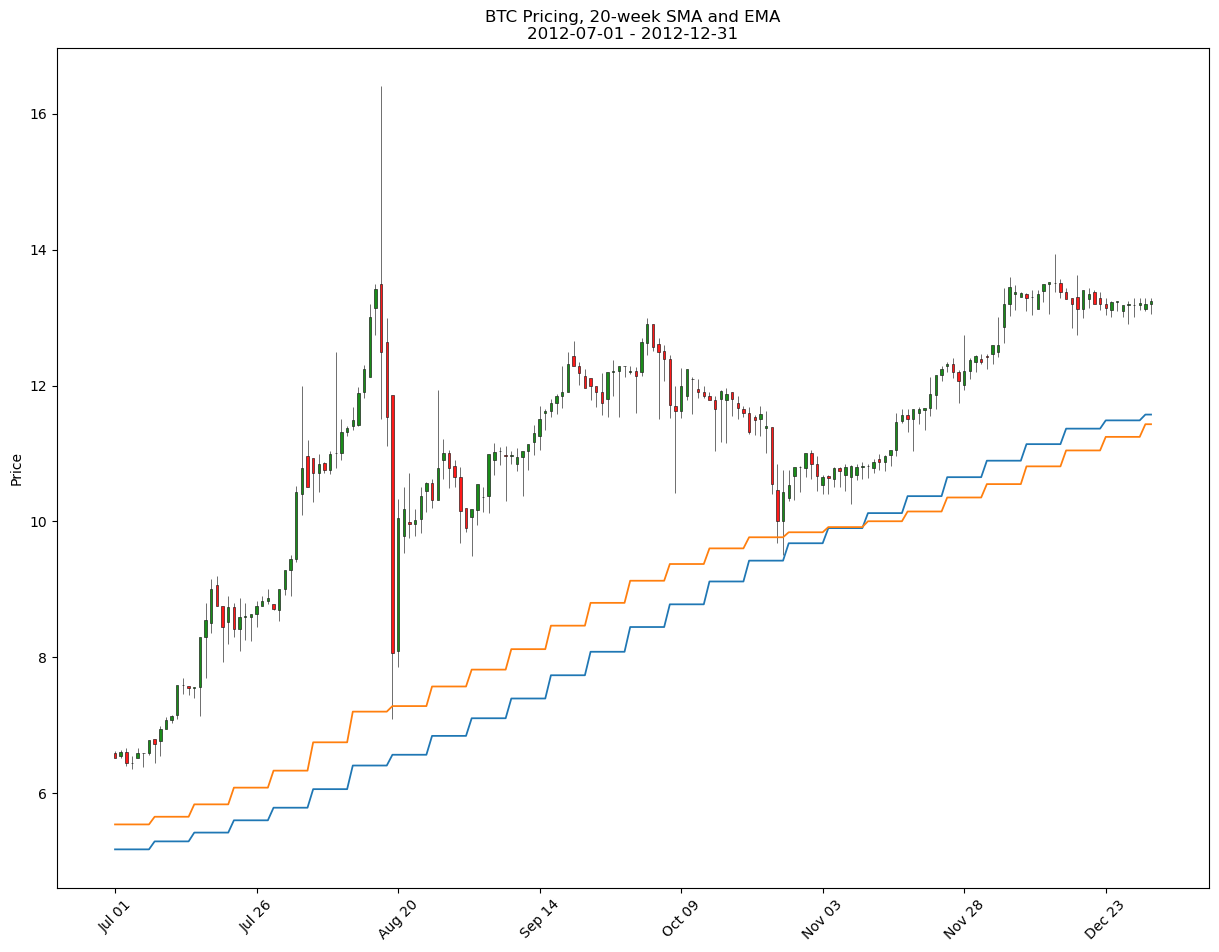

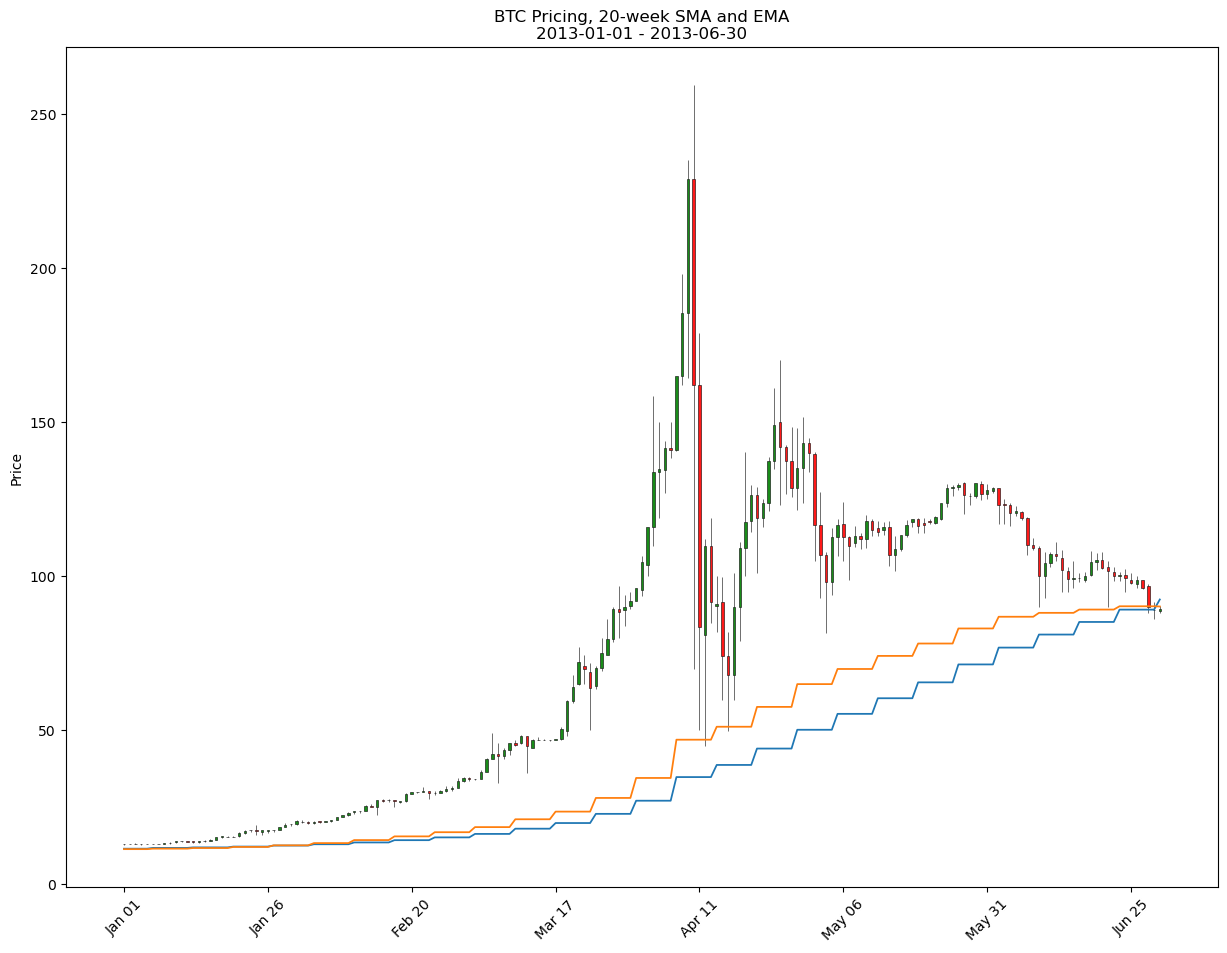

Use mplfinance to create and save figure to file.

mc = mpf.make_marketcolors(up='g',down='r')

s = mpf.make_mpf_style(marketcolors=mc)

for year in ['2012','2013','2014','2015','2016',

'2017','2018','2019','2020','2021']:

sub1 = d[(d['date'].astype(str).str.contains(year))&

(d['date'].astype(str).str[5:7].isin(['01', '02', '03', '04', '05', '06']))].copy()

sub2 = d[(d['date'].astype(str).str.contains(year))&

(d['date'].astype(str).str[5:7].isin(['07', '08', '09', '10', '11', '12']))].copy()

for sub in [sub1, sub2]:

sub['date'] = pd.to_datetime(sub['date'])

fname = ('dynamic-cost-averaging-into-bitcoin/mpf_fig_' +

str(d['date'].min())[:10] +

str(d['date'].max())[:10] +

'.pdf')

title = ('BTC Pricing, 20-week SMA and EMA\n'

+ str(sub['date'].min())[:10] + ' - ' + str(sub['date'].max())[:10])

ma_plots = [

mpf.make_addplot(sub['sma_20'],

title=title),

mpf.make_addplot(sub['ema_20'],

title=title)

]

mpf.plot((sub[['date','open','high','low','close']]

.set_index('date')),

type='candle',

update_width_config=dict(candle_linewidth=0.4),

style=s,

figsize=(16,12),

addplot=ma_plots,

savefig=dict(fname=fname,

dpi=400,

pad_inches=0.25,

bbox_inches='tight'))

mpf.plot((sub[['date','open','high','low','close']]

.set_index('date')),

type='candle',

update_width_config=dict(candle_linewidth=0.4),

style=s,

figsize=(16,12),

addplot=ma_plots)

Cut into Equally Populated Thresholds

d['qcut sma labels'] = pd.qcut(d['sma_delta'],

q=10,

labels=[1,2,3,4,5,6,7,8,9,10])

d['qcut sma thresholds'] = pd.qcut(d['sma_delta'],

q=10)

d['lowerbound'] = (d['qcut sma thresholds']

.astype(str)

.str.replace('(','',regex=False)

.str.split(',',

expand=True)[0])

d['lowerbound'] = d['lowerbound'].astype(float)

(d[['qcut sma labels','qcut sma thresholds']]

.sort_values('qcut sma labels')

.drop_duplicates()

.dropna()

.reset_index(drop=True)

.rename(columns={'qcut sma thresholds' : 'Range of % Daily Deviations from the 20-week SMA',

'qcut sma labels' : '* 10 = Percentile'}))

| * 10 = Percentile | Range of % Daily Deviations from the 20-week SMA | |

|---|---|---|

| 0 | 1 | (-53.512, -24.413] |

| 1 | 2 | (-24.413, -13.839] |

| 2 | 3 | (-13.839, -4.966] |

| 3 | 4 | (-4.966, 4.005] |

| 4 | 5 | (4.005, 11.651] |

| 5 | 6 | (11.651, 19.45] |

| 6 | 7 | (19.45, 32.078] |

| 7 | 8 | (32.078, 53.195] |

| 8 | 9 | (53.195, 82.132] |

| 9 | 10 | (82.132, 557.602] |

sma_th = (d[['lowerbound']]

.drop_duplicates()

.dropna()

.sort_values('lowerbound')

.reset_index(drop=True))

sma_th = sma_th.T

sma_th.columns += 1

for col in sma_th:

d[col] = sma_th[col].values[0]

sma_th

| 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | |

|---|---|---|---|---|---|---|---|---|---|---|

| lowerbound | -53.512 | -24.413 | -13.839 | -4.966 | 4.005 | 11.651 | 19.45 | 32.078 | 53.195 | 82.132 |

for col in sma_th.columns:

d[col] = d['sma_20'] + (d[col] * 0.01 * d['sma_20'])

d[sma_th.columns].tail(1).round(0).astype(int)

| 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | |

|---|---|---|---|---|---|---|---|---|---|---|

| 3637 | 20693 | 33645 | 38352 | 42301 | 46294 | 49698 | 53169 | 58790 | 68190 | 81070 |

sma_th.head()

| 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | |

|---|---|---|---|---|---|---|---|---|---|---|

| lowerbound | -53.512 | -24.413 | -13.839 | -4.966 | 4.005 | 11.651 | 19.45 | 32.078 | 53.195 | 82.132 |

for year in ['2012','2013','2014','2015','2016',

'2017','2018','2019','2020','2021']:

sub = d[d['date'].astype(str).str.contains(year)].copy()

plt.figure(figsize=(16,9))

mpl.rcParams['axes.prop_cycle'] = mpl.cycler(color=['#309143', '#51b364', '#8ace7e', # Dark -> Light Green

'#ffda66', '#f0bd27', '#e39802', # Dark -> Light Yellow

'#ff684c', '#e03531', '#b60a1c', # Dark -> Light Red

'#b60a1c'])

for n, col in enumerate(sma_th.columns):

if sma_th[col].values[0].round(1) >= 0:

label = '{:2}: + {:4.1f}%: '.format(n+1, sma_th[col].values[0])

else:

label = '{:2}: - {:5.1f}%'.format(n+1, abs(sma_th[col].values[0]))

plt.plot(pd.to_datetime(sub['date']),

sub[col],

alpha=0.2,

label=label,

linewidth=2)

for col in sma_th.columns[1:]:

plt.fill_between(pd.to_datetime(sub['date']),

sub[col],

sub[col-1],

alpha=0.2)

plt.plot(pd.to_datetime(sub['date']),

sub['sma_20'],

color='darkblue',

alpha=1,

linewidth=1.5)

plt.plot(pd.to_datetime(sub['date']),

sub['ema_20'],

color='darkred',

alpha=1,

linewidth=1.5)

plt.plot(pd.to_datetime(sub['date']),

sub['close'],

color='k',

linewidth=1.5);

for spine in plt.gca().spines.values():

spine.set_visible(False)

plt.grid()

plt.title(year + ' Bitcoin Price, SMA, EMA, and SMA Deviation Ranges')

plt.legend(title='Delta from SMA')

plt.ylabel('Price')

plt.xlabel('Date');

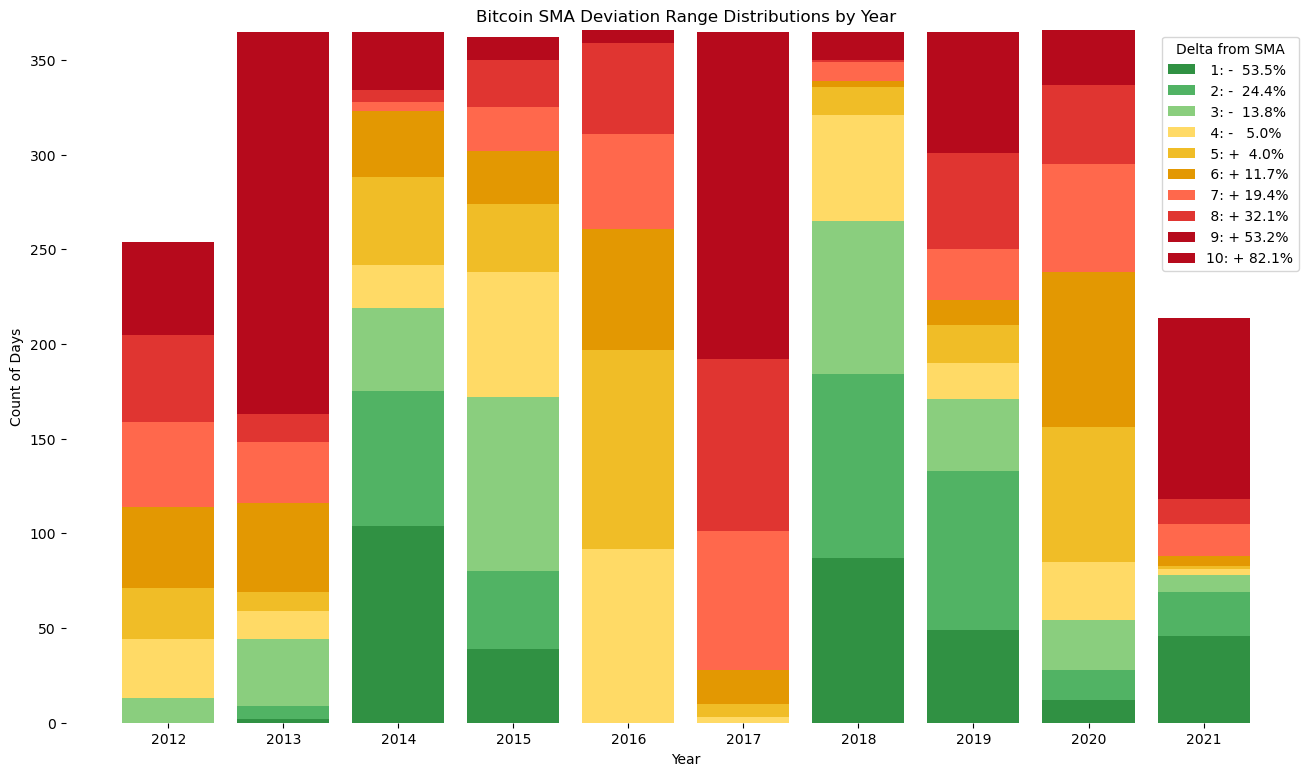

d['year'] = d['date'].astype(str).str[:4]

totals = (pd.DataFrame(d[~d['qcut sma labels'].isna()]

['year'].value_counts())

.rename(columns={'year':'total'}))

category_counts = (pd.pivot_table(d[['year','close','qcut sma labels']],

index='year',

columns='qcut sma labels',

aggfunc=len))

category_counts.columns = category_counts.columns.droplevel()

cats = (totals

.join(category_counts)

.reset_index()

.rename(columns={'index':'year'})

.sort_values('year')

.reset_index(drop=True))

cats = cats.drop(columns=['total']).fillna(0)

labels = []

for n, col in enumerate(sma_th.columns):

if sma_th[col].values[0].round(1) >= 0:

labels.append('{:2}: + {:4.1f}%'.format(n+1, sma_th[col].values[0]))

else:

labels.append('{:2}: - {:5.1f}%'.format(n+1, abs(sma_th[col].values[0])))

plt.figure(figsize=(16,9))

bottoms = [0] * cats.shape[0]

plt.bar(cats['year'], cats[1], label=labels[0])

for col in cats.columns[2:]:

bottoms += cats[col-1].values

plt.bar(cats['year'], cats[col], bottom=bottoms, label=labels[col-1])

for spine in plt.gca().spines.values():

spine.set_visible(False)

plt.title('Bitcoin SMA Deviation Range Distributions by Year')

plt.legend(title='Delta from SMA')

plt.ylabel('Count of Days')

plt.xlabel('Year');

th = (d[['qcut sma labels','qcut sma thresholds']]

.sort_values('qcut sma labels')

.drop_duplicates()

.dropna()

.reset_index(drop=True)

.rename(columns={'qcut sma thresholds' : 'Range of % Daily Deviations',

'qcut sma labels' : '* 10 = Percentile'}))

th['Range of % Daily Deviations'] = (th['Range of % Daily Deviations'].astype(str)

.str.replace('(','',regex=False)

.str.replace(' ','',regex=False)

.str.replace(']','',regex=False))

th['lower bound'] = (th['Range of % Daily Deviations']

.str.split(',',expand=True)[0]).astype(float).round(1)

th['upper bound'] = (th['Range of % Daily Deviations']

.str.split(',',expand=True)[1]).astype(float).round(1)

th = (th

.drop(columns=['Range of % Daily Deviations'])

.set_index('* 10 = Percentile'))

th

| lower bound | upper bound | |

|---|---|---|

| * 10 = Percentile | ||

| 1 | -53.5 | -24.4 |

| 2 | -24.4 | -13.8 |

| 3 | -13.8 | -5.0 |

| 4 | -5.0 | 4.0 |

| 5 | 4.0 | 11.7 |

| 6 | 11.7 | 19.4 |

| 7 | 19.4 | 32.1 |

| 8 | 32.1 | 53.2 |

| 9 | 53.2 | 82.1 |

| 10 | 82.1 | 557.6 |

- 50% of the time, BTC has traded at least 11.7% above the 20-week simple moving average.

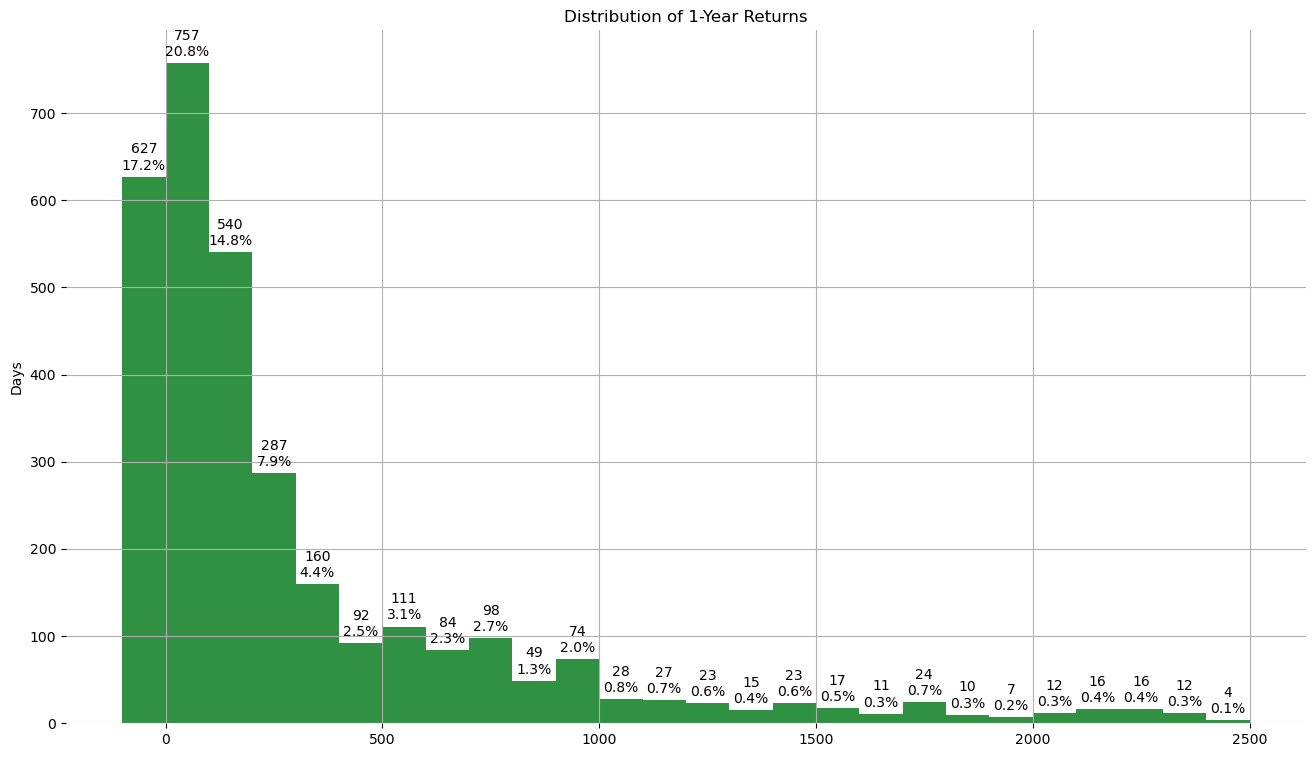

Calculate Average Annual 1-Year ROI

one_time_delta = pd.Timedelta(weeks = 1 * 52 )

d['current_day'] = pd.to_datetime(d['date'])

d['one_time_delta_later'] = d['current_day'] + one_time_delta

s = pd.merge(d[['one_time_delta_later','close']], (d[['current_day','close']]

.rename(columns={'close' : 'one_time_delta_later_close'})),

left_on='one_time_delta_later',

right_on='current_day',

how='left')

s = s.drop(columns=['one_time_delta_later'])

s['1_year_return_percent'] = (((s['one_time_delta_later_close'] / s['close'])-1) * 100)

s['1_year_return_percent'].describe().round(1)

count 3238.0

mean 465.6

std 1008.9

min -83.4

25% 25.5

50% 146.2

75% 471.7

max 9007.0

Name: 1_year_return_percent, dtype: float64

plt.figure(figsize=(16,9))

plt.hist(s['1_year_return_percent'],

bins=np.arange(-100,2600,100))

plt.grid()

for spine in plt.gca().spines.values():

spine.set_visible(False)

plt.ylabel('Days')

plt.title('Distribution of 1-Year Returns')

rects = plt.gca().patches

for n, r in enumerate(rects):

height = r.get_height()

plt.gca().text(r.get_x() + r.get_width() / 2,

height + 22,

str(int(height)) + '\n' + str(((height/d.shape[0])*100.).round(1)) + '%',

ha='center',

va='center')

d['date'] = d['date'].astype(str)

s['current_day'] = s['current_day'].astype(str)

d = pd.merge(d, s[['current_day','1_year_return_percent']],

left_on='date',

right_on='current_day',

how='left',

indicator=True)

d = d.drop(columns=['current_day_x','one_time_delta_later','current_day_y','_merge'])

d['3-tile'] = pd.qcut(d['sma_delta'],

q=3,

labels=list(np.arange(1,4,1)))

d['3-tile labels'] = pd.qcut(d['sma_delta'],

q=3)

d[['3-tile','3-tile labels']].drop_duplicates().dropna().sort_values('3-tile').reset_index(drop=True)

| 3-tile | 3-tile labels | |

|---|---|---|

| 0 | 1 | (-53.512, -2.844] |

| 1 | 2 | (-2.844, 27.489] |

| 2 | 3 | (27.489, 557.602] |

d[['3-tile','1_year_return_percent']].groupby('3-tile').median()

| 1_year_return_percent | |

|---|---|

| 3-tile | |

| 1 | 56.024845 |

| 2 | 113.622033 |

| 3 | 312.150165 |

d[['3-tile','1_year_return_percent']].groupby('3-tile').mean()

| 1_year_return_percent | |

|---|---|

| 3-tile | |

| 1 | 177.901615 |

| 2 | 256.470660 |

| 3 | 975.509322 |

Surprisingly, the higher 3-tiles appear to have higher average and median 1-year returns. This may look quite a bit different over longer time spans as trading significantly below the 20-week SMA may indicate BTC is in a long-duration bear market, suppressing the 1-year returns.

Calculate Actual Percentiles

Buy, Sell, Hold

percentiles = (pd.DataFrame(pd.qcut(d['sma_delta'],

q=100,

labels=list(np.arange(1,101,1))))

.rename(columns={'sma_delta':'percentile'}))

percentiles['thresholds'] = pd.qcut(d['sma_delta'],

q=100)

percentiles = (percentiles[['percentile','thresholds']]

.sort_values('percentile')

.drop_duplicates()

.dropna()

.reset_index(drop=True))

percentiles['thresholds'] = (percentiles['thresholds'].astype(str)

.str.replace('(','',regex=False)

.str.replace(' ','',regex=False)

.str.replace(']','',regex=False))

percentiles['lower bound'] = (percentiles['thresholds']

.str.split(',',expand=True)[0]).astype(float).round(1)

percentiles['upper bound'] = (percentiles['thresholds']

.str.split(',',expand=True)[1]).astype(float).round(1)

percentiles = (percentiles

.drop(columns=['thresholds'])

.set_index('percentile'))

pd.set_option('display.max_rows', 100)

percentiles

| lower bound | upper bound | |

|---|---|---|

| percentile | ||

| 1 | -53.5 | -39.3 |

| 2 | -39.3 | -35.6 |

| 3 | -35.6 | -33.5 |

| 4 | -33.5 | -31.9 |

| 5 | -31.9 | -31.2 |

| 6 | -31.2 | -29.9 |

| 7 | -29.9 | -28.3 |

| 8 | -28.3 | -27.0 |

| 9 | -27.0 | -25.9 |

| 10 | -25.9 | -24.4 |

| 11 | -24.4 | -23.1 |

| 12 | -23.1 | -21.5 |

| 13 | -21.5 | -20.5 |

| 14 | -20.5 | -19.3 |

| 15 | -19.3 | -18.4 |

| 16 | -18.4 | -17.3 |

| 17 | -17.3 | -16.4 |

| 18 | -16.4 | -15.6 |

| 19 | -15.6 | -14.7 |

| 20 | -14.7 | -13.8 |

| 21 | -13.8 | -13.0 |

| 22 | -13.0 | -12.0 |

| 23 | -12.0 | -10.9 |

| 24 | -10.9 | -9.5 |

| 25 | -9.5 | -8.5 |

| 26 | -8.5 | -7.9 |

| 27 | -7.9 | -7.1 |

| 28 | -7.1 | -6.1 |

| 29 | -6.1 | -5.6 |

| 30 | -5.6 | -5.0 |

| 31 | -5.0 | -4.5 |

| 32 | -4.5 | -3.9 |

| 33 | -3.9 | -3.2 |

| 34 | -3.2 | -2.0 |

| 35 | -2.0 | -0.7 |

| 36 | -0.7 | 0.4 |

| 37 | 0.4 | 1.6 |

| 38 | 1.6 | 2.8 |

| 39 | 2.8 | 3.4 |

| 40 | 3.4 | 4.0 |

| 41 | 4.0 | 4.7 |

| 42 | 4.7 | 5.5 |

| 43 | 5.5 | 6.3 |

| 44 | 6.3 | 7.0 |

| 45 | 7.0 | 7.6 |

| 46 | 7.6 | 8.4 |

| 47 | 8.4 | 9.2 |

| 48 | 9.2 | 10.2 |

| 49 | 10.2 | 10.9 |

| 50 | 10.9 | 11.7 |

| 51 | 11.7 | 12.3 |

| 52 | 12.3 | 13.2 |

| 53 | 13.2 | 13.9 |

| 54 | 13.9 | 14.5 |

| 55 | 14.5 | 15.2 |

| 56 | 15.2 | 15.9 |

| 57 | 15.9 | 16.6 |

| 58 | 16.6 | 17.5 |

| 59 | 17.5 | 18.4 |

| 60 | 18.4 | 19.4 |

| 61 | 19.4 | 20.3 |

| 62 | 20.3 | 21.2 |

| 63 | 21.2 | 22.9 |

| 64 | 22.9 | 24.1 |

| 65 | 24.1 | 25.1 |

| 66 | 25.1 | 26.6 |

| 67 | 26.6 | 27.8 |

| 68 | 27.8 | 29.4 |

| 69 | 29.4 | 30.8 |

| 70 | 30.8 | 32.1 |

| 71 | 32.1 | 33.8 |

| 72 | 33.8 | 35.7 |

| 73 | 35.7 | 37.6 |

| 74 | 37.6 | 39.3 |

| 75 | 39.3 | 41.9 |

| 76 | 41.9 | 44.0 |

| 77 | 44.0 | 45.9 |

| 78 | 45.9 | 48.5 |

| 79 | 48.5 | 50.6 |

| 80 | 50.6 | 53.2 |

| 81 | 53.2 | 55.1 |

| 82 | 55.1 | 57.2 |

| 83 | 57.2 | 59.5 |

| 84 | 59.5 | 61.7 |

| 85 | 61.7 | 66.2 |

| 86 | 66.2 | 70.8 |

| 87 | 70.8 | 73.6 |

| 88 | 73.6 | 76.7 |

| 89 | 76.7 | 79.1 |

| 90 | 79.1 | 82.1 |

| 91 | 82.1 | 85.9 |

| 92 | 85.9 | 90.0 |

| 93 | 90.0 | 96.1 |

| 94 | 96.1 | 103.0 |

| 95 | 103.0 | 110.5 |

| 96 | 110.5 | 120.6 |

| 97 | 120.6 | 149.9 |

| 98 | 149.9 | 185.7 |

| 99 | 185.7 | 254.6 |

| 100 | 254.6 | 557.6 |

percentiles.loc[[1,33,67,100],:]

| lower bound | upper bound | |

|---|---|---|

| percentile | ||

| 1 | -53.5 | -39.3 |

| 33 | -3.9 | -3.2 |

| 67 | 26.6 | 27.8 |

| 100 | 254.6 | 557.6 |

If the space of available actions is:

- Take no action (hodl) and store free cash flow as cash reserves,

- Buy BTC using any available free cash flow, and

- Buy BTC using any available free cash flow + a certain proportion of reserves.

Then, it seems like reasonable guidelines for each of these steps is to:

| Action | Guideline |

|---|---|

| Save 1x free cash flow as reserves | price more than 27.8% above 20-week SMA |

| Buy BTC using 1x free cash flow | price less than 27.8% above 20-week SMA |

| Buy BTC using 2x free cash flow | price more than 3.2% below 20-week SMA |